Kubernetes has become the go-to platform for container orchestration, especially for companies implementing microservices. Kubernetes health checks (probes) provide a standard way to monitor the health and availability of your services, and they serve as a conceptual framework to build on.

In this tutorial, we’ll explore how to configure health checks using Edge Stack, a popular Kubernetes-native API gateway and specialized control plane for Envoy Proxy that provides a simple way to configure and manage APIs for microservices. By configuring Active Health Checks in Edge Stack API Gateway, you can make sure traffic is routed only to microservice instances that are healthy and able to serve it.

Let’s dive in by first understanding how basic Kubernetes health checks work. Then we’ll examine Edge Stack’s Basic Health Checks and Active Health Checks. Finally, we will discuss how Edge Stack expands on the existing approach to give you more control and customization capabilities.

Types of Kubernetes health checks

Performing Kubernetes health checks is important to ensure the application runs as it should and is ready to accept traffic. Kubernetes implements these checks as probes that the kubelet runs periodically against each container. In this section, we will look at the three kinds of probes in more detail.

Liveness probes

Kubernetes uses liveness probes to determine when to restart a container. For example, if an application deadlocks or gets stuck and stops making progress, its liveness probe will fail, and Kubernetes will restart the container to get the pod back to a working state.

To configure liveness probes for a Kubernetes pod, you can use the exec, httpGet, tcpSocket, or grpc methods. The configuration for each of these can be found in the official Kubernetes documentation.

For example, you can configure an exec probe to check for the existence of a file:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: liveness-test
spec:
  selector:
    matchLabels:
      app: liveness-test
  template:
    metadata:
      labels:
        app: liveness-test
    spec:
      containers:
      - name: liveness-test
        image: alpine
        args:
        - "/bin/sh"
        - "-c"
        # Create /tmp/ready, then delete it after 10 seconds to simulate a failure
        - "touch /tmp/ready; sleep 10; rm -rf /tmp/ready; sleep 600;"
        livenessProbe:
          exec:
            # The probe succeeds while `cat /tmp/ready` exits with status 0
            command:
            - cat
            - /tmp/ready
          initialDelaySeconds: 5
          periodSeconds: 5
        resources:
          limits:
            memory: "128Mi"
            cpu: "500m"
```

Here, the liveness probe uses the exec method to run the cat /tmp/ready command every 5 seconds, starting 5 seconds after the container starts (the initialDelaySeconds parameter). The container creates this file at startup and deletes it 10 seconds later so we can simulate a situation where the probe fails. The pod is reported as running at first, but once the command fails three times in a row (the default failureThreshold), Kubernetes restarts the container.

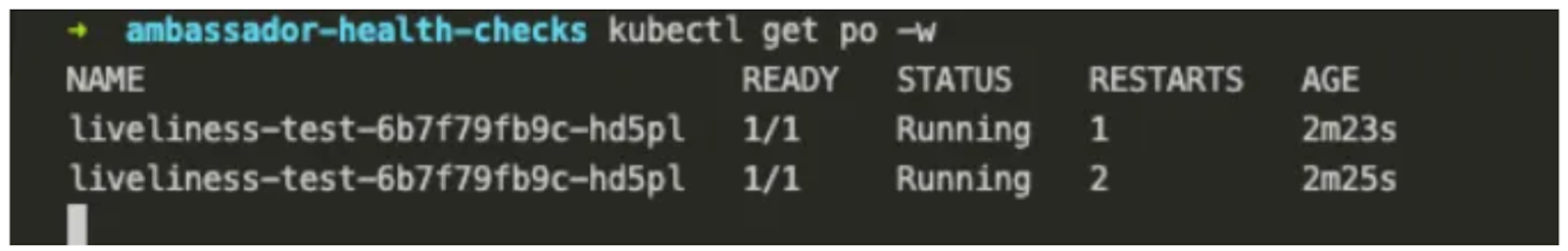

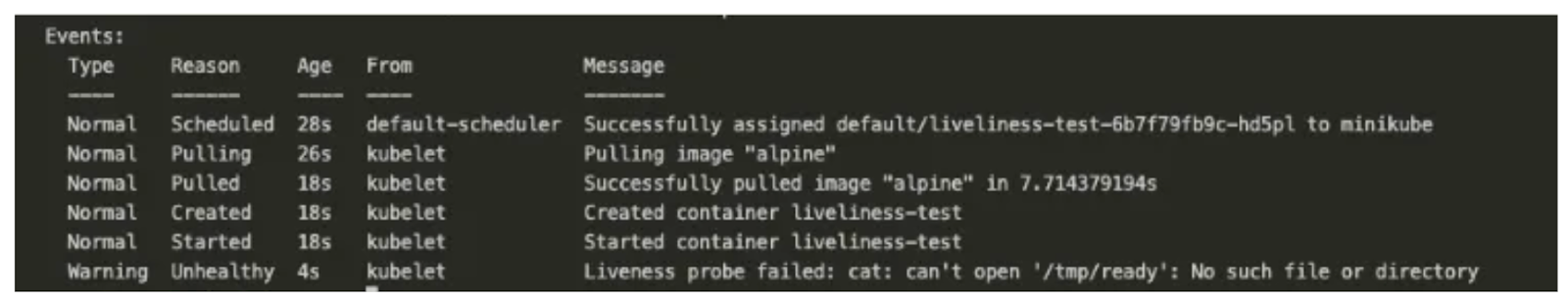

If we describe the pod with kubectl describe pod, we should see an event reporting that the liveness probe failed.

As mentioned earlier, a liveness probe can run a command, send an HTTP request, open a TCP connection, or make a gRPC health check call.
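The example above uses only the exec method. As a rough sketch of the other three, here are alternative livenessProbe blocks; a container takes only one livenessProbe, so you would pick one and place it under the container entry. The paths and port numbers are assumptions for illustration, and the gRPC variant assumes the container implements the standard gRPC Health Checking Protocol.

```yaml
# Alternative 1: HTTP GET. Any status code from 200 to 399 counts as success.
livenessProbe:
  httpGet:
    path: /healthz        # hypothetical health endpoint
    port: 8080            # hypothetical container port
  periodSeconds: 10
---
# Alternative 2: TCP. Success means the kubelet could open a connection to the port.
livenessProbe:
  tcpSocket:
    port: 8080            # hypothetical container port
  periodSeconds: 10
---
# Alternative 3: gRPC. The container must implement grpc.health.v1.Health;
# gRPC probes are generally available since Kubernetes 1.27.
livenessProbe:
  grpc:
    port: 9090            # hypothetical gRPC port
  periodSeconds: 10
```

HTTP probes are the usual choice for web services, TCP probes suit services that only need to accept connections, and gRPC probes avoid bundling an extra health-check binary into gRPC containers.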

Readiness probes

Readiness probes determine whether a pod is ready to serve network traffic. They support the same exec, httpGet, tcpSocket, and grpc methods as liveness probes. If a pod’s readiness probe fails, the pod is removed from the endpoints of the Services that select it, so it stops receiving traffic through them; unlike with a failed liveness probe, the container is not restarted. The configuration for a readiness probe is similar to that of a liveness probe. Take a look at the example below to understand how readiness probes are configured:

```yaml
readinessProbe:
  exec:
    command:
    - cat
    - /tmp/ready
  initialDelaySeconds: 5
  periodSeconds: 5
```

Startup probes

Kubernetes uses startup probes to determine when the application in a container has started. Containers that take a long time to start may fail their liveness probes before the application is even running, so the pod keeps getting restarted. When a startup probe is configured, Kubernetes disables the liveness and readiness checks until the startup probe succeeds. The configuration for a startup probe is similar to the liveness and readiness probes. See an example below:

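```yaml
startupProbe:
  exec:
    command:
    - cat
    - /tmp/ready
  initialDelaySeconds: 5
  periodSeconds: 5
  # Tolerate up to 30 failed checks (about 150 seconds) before restarting
  failureThreshold: 30
```

Configuring Basic health checks on Edge Stack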

Edge Stack health checks are conceptually similar to Kubernetes probes, but they are run by Envoy, the proxy Edge Stack is built on, rather than by the kubelet. Edge Stack lets you configure health checks for each service with a simple YAML configuration in the Mapping that routes traffic to it.

Prerequisites

Before we begin, make sure you meet the following requirements:

- A running instance of Edge Stack

- Access to the Kubernetes command-line tool kubectl

- Basic knowledge of Kubernetes and YAML syntax

- The Kubernetes endpoint resolver configured (required for active health checking)
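For the last prerequisite, a minimal sketch based on the Edge Stack resolver resources might look like the following. It assumes Edge Stack is installed in the ambassador namespace, and the resolver name endpoint is our own choice. Setting it in the ambassador Module makes it the default for every Mapping; alternatively, you can leave the Module unchanged and add resolver: endpoint to individual Mappings.

```yaml
# Defines a resolver that gives Envoy the individual pod endpoints behind each Service.
apiVersion: getambassador.io/v3alpha1
kind: KubernetesEndpointResolver
metadata:
  name: endpoint            # our chosen name; referenced below
  namespace: ambassador     # assumed Edge Stack namespace
---
# Makes the endpoint resolver the default for all Mappings.
apiVersion: getambassador.io/v3alpha1
kind: Module
metadata:
  name: ambassador
  namespace: ambassador     # assumed Edge Stack namespace
spec:
  config:
    resolver: endpoint
```

Changing the default affects every route, so setting the resolver per Mapping is the more conservative option if you only want active health checks on a few services.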

Step 1: Define a health check probe

To configure a health check at the Kubernetes level, define a probe in your Deployment. The probe specifies a URL path and port number that the kubelet uses to check the container; pods that fail a readiness probe are removed from the Service’s endpoints, so Edge Stack stops routing traffic to them as well. Here is an example of a probe definition:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
      - name: my-app
        image: my-image
        ports:
        - containerPort: 8080
        readinessProbe:
          httpGet:
            path: /health
            port: 8080
          initialDelaySeconds: 5
          periodSeconds: 5
```

In this example, we have defined a readiness probe for the my-app container that checks the /health endpoint on port 8080. The initialDelaySeconds parameter specifies how long to wait before the first check, and the periodSeconds parameter specifies how often to perform subsequent checks.
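The Mapping in Step 2 routes to a Service named my-app. If you don't already have one, a minimal sketch could look like this, assuming the Service exposes port 80 and forwards to the container's port 8080:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app            # referenced as `service: my-app` in the Mapping
spec:
  selector:
    app: my-app           # matches the pod labels in the Deployment
  ports:
  - name: http
    port: 80              # assumed Service port; a Mapping without an explicit port uses 80
    targetPort: 8080      # the containerPort probed above
```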

Step 2: Configure Edge Stack to use health checks

Next, configure the health check that Edge Stack itself performs. This is done in the Mapping resource that routes traffic to the service. Here is an example:

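```yaml
apiVersion: getambassador.io/v3alpha1
kind: Mapping
metadata:
  name: my-app-backend
spec:
  hostname: "*"
  prefix: /
  service: my-app
  health_checks:
  - unhealthy_threshold: 5
    healthy_threshold: 2
    interval: "5s"
    timeout: "10s"
    health_check:
      http:
        path: /metrics
        hostname: "*"
        expected_statuses:
        - max: 200
          min: 200
```

In this example, Edge Stack sends an HTTP request to the /metrics endpoint of every my-app pod every 5 seconds, waits up to 10 seconds for a reply, and expects a 200 response. A pod is marked unhealthy after 5 consecutive failed checks (unhealthy_threshold) and healthy again after 2 consecutive successful checks (healthy_threshold). Note that this path is independent of the readinessProbe path from Step 1: Edge Stack does not read the Kubernetes probe configuration.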

Step 3: Apply the changes

Finally, apply the changes to the Kubernetes cluster with kubectl apply:

```shell
kubectl apply -f deployment.yaml
kubectl apply -f service.yaml
kubectl apply -f mapping.yaml
```

After applying the changes, you can check the status of the pods with kubectl get pods -l app=my-app.

Configuring Active Health Checks

With the release of Edge Stack 3.4, the Ambassador team introduced Active Health Checking. This differs from passive health checking, where circuit breakers monitor the response codes returned by the upstream service and stop traffic only if those responses exceed a predefined threshold.

Active Health Checking instead asynchronously sends HTTP (or gRPC) health check requests to the upstream servers. If an upstream fails the configured number of consecutive checks, it is removed from the load-balancing pool until it passes subsequent health checks again.

The active health check lets Envoy independently verify the health of each upstream pod, regardless of whether any client requests are in flight. If a pod is found to be unhealthy, Envoy stops forwarding traffic to it until it is healthy again, providing better guarantees of availability and readiness.

As noted in the Ambassador documentation, Active Health Checks require the Kubernetes endpoint resolver. With the endpoint resolver, Envoy knows about each pod in a deployment, whereas with the Kubernetes service resolver Envoy sees the upstream only as a single endpoint (the Service). Because Envoy is aware of the individual pods, active health checks can mark a single pod as unhealthy while the remaining pods keep serving requests. Both HTTP and gRPC health checks can be configured.

The Mapping below lists every available health check field, with each value replaced by its type:

```yaml
apiVersion: getambassador.io/v3alpha1
kind: Mapping
metadata:
  name: "example-mapping"
  namespace: "example-namespace"
spec:
  hostname: "*"
  prefix: /example/
  service: quote
  health_checks: list[object]
  - unhealthy_threshold: int
    healthy_threshold: int
    interval: duration
    timeout: duration
    health_check: object
      http:
        path: string
        hostname: string
        remove_request_headers: list[string]
        add_request_headers: list[object]
        - example-header-1:
            append: bool
            value: string
        expected_statuses: list[object]
        - max: int (100-599)
          min: int (100-599)
  - health_check: object
      grpc:
        authority: string
        upstream_name: string
```

These fields allow you to fine-tune your health checks and provide enhanced controls for troubleshooting and monitoring.
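As a concrete sketch of the gRPC variant, the hypothetical Mapping below health-checks a gRPC service named greeter on port 50051 (both names are assumptions). It assumes the Kubernetes endpoint resolver is enabled, as listed in the prerequisites, and that the upstream implements the standard gRPC Health Checking Protocol (grpc.health.v1.Health). We also assume upstream_name is the service name sent in the health check request; confirm its exact meaning in the Edge Stack Mapping reference for your version.

```yaml
apiVersion: getambassador.io/v3alpha1
kind: Mapping
metadata:
  name: greeter-grpc              # hypothetical name
spec:
  hostname: "*"
  grpc: true                      # the upstream speaks gRPC (HTTP/2)
  prefix: /helloworld.Greeter/
  rewrite: /helloworld.Greeter/
  service: greeter:50051          # hypothetical gRPC Service and port
  health_checks:
  - unhealthy_threshold: 3        # mark a pod unhealthy after 3 consecutive failed checks
    healthy_threshold: 2          # mark it healthy again after 2 consecutive successes
    interval: "10s"
    timeout: "2s"
    health_check:
      grpc:
        upstream_name: helloworld.Greeter   # assumed: service name sent in the health request
```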

Combining Edge Stack with AWS or GKE

You can run Edge Stack API Gateway on AWS using Amazon EKS. Here are the steps to follow ⬇️

- Create an Amazon EKS cluster and worker nodes

- Install Ambassador Edge Stack in the cluster

- Create a Kubernetes Service of type: LoadBalancer with the annotation service.beta.kubernetes.io/aws-load-balancer-type: nlb, so that AWS provisions a Network Load Balancer

- Configure health checks on your Mapping resources as described above.

- Test the health checks and troubleshoot any issues.
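As a sketch of the LoadBalancer step, a Service like the following asks AWS for a Network Load Balancer in front of Edge Stack. The name, namespace, and selector label are assumptions; if your installation already creates a Service for Edge Stack, add the annotation to that Service instead of creating a second one. Ports 8080 and 8443 are the listener ports used in the Edge Stack quick-start configuration, so adjust them to match your Listener resources.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: edge-stack                 # assumed Service name
  namespace: ambassador            # assumed Edge Stack namespace
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: nlb
spec:
  type: LoadBalancer
  selector:
    app.kubernetes.io/name: edge-stack   # assumed pod label; match your install
  ports:
  - name: http
    port: 80
    targetPort: 8080               # assumed HTTP Listener port
  - name: https
    port: 443
    targetPort: 8443               # assumed HTTPS Listener port
```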

For more detailed instructions, you can refer to the AWS checklist.

Troubleshooting health checks on Edge Stack

If your health checks are not working as expected, here are some troubleshooting suggestions:

- Check the logs of the service to see if any errors or exceptions are being thrown.

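- Verify that the probe endpoint returns a status code the check accepts: 200–399 for a Kubernetes httpGet probe, or a code within expected_statuses for an Edge Stack active health check.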

- Check Edge Stack logs to see if there are any errors or warnings related to health checks.

- Ensure that the service is running on the specified port and that the port is open in the container.

- Verify that the health check configuration in the Kubernetes YAML file is correct.

Monitoring health checks on Edge Stack

To monitor the health checks on Edge Stack, you can use a variety of tools, including but not limited to:

- Prometheus: A popular open-source monitoring solution that can be used to collect and analyze metrics from Ambassador Edge Stack

- Grafana: A dashboarding and visualization tool that can be used to create custom dashboards to monitor health checks

- Datadog: A popular monitoring and analytics platform that can be used to collect and visualize metrics from Edge Stack and other Kubernetes components.

These tools can track the results of your services’ health checks over time and alert you when they start failing.
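As a rough sketch of the Prometheus route, if you run the Prometheus Operator you could scrape Edge Stack's admin endpoint (port 8877, path /metrics) with a ServiceMonitor. The label, port name, and namespaces below are assumptions based on a typical Edge Stack install, so check the admin Service in your cluster; depending on your Prometheus resource, the ServiceMonitor may also need a label that its serviceMonitorSelector matches. Once scraped, Envoy's per-cluster health check counters (for example envoy_cluster_health_check_failure) should be available for Grafana dashboards and alerts.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: edge-stack-monitor
  namespace: monitoring            # assumed Prometheus Operator namespace
spec:
  namespaceSelector:
    matchNames:
    - ambassador                   # assumed Edge Stack namespace
  selector:
    matchLabels:
      service: ambassador-admin    # assumed label on the Edge Stack admin Service
  endpoints:
  - port: ambassador-admin         # assumed name of the admin port (8877)
    path: /metrics
```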

Conclusion

Thanks for sticking with me till the end of this tutorial. We looked at liveness, readiness, and startup probes in Kubernetes and how to configure health checks using Edge Stack. Configuring health checks for microservices is critical to ensuring their reliability and availability.

By utilizing Edge Stack API Gateway, you can monitor and manage your microservices’ health, ensuring they can efficiently handle incoming traffic. With the right configuration, you can minimize downtime and improve the overall user experience.