AWS (Amazon Web Services) is a cloud platform offering over 200 products across various technologies. Among them is Amazon Elastic Kubernetes Service (EKS), a managed service for orchestrating containers with Kubernetes.

Kubernetes is a platform for deploying and managing containerized applications. It works alongside container runtimes such as Docker, CRI-O, and containerd, which run the containers that Kubernetes schedules.

In this article, you’ll explore the Amazon EKS architecture and learn two ways to deploy a Kubernetes cluster on AWS — through the AWS console and from your local machine.

Prerequisites

This article assumes the reader has the following:

- An Amazon Web Services (AWS) account

- AWS command-line interface (CLI)

- eksctl and kubectl

- VS Code and the Kubernetes VS Code extension

What is a Kubernetes Cluster?

A Kubernetes cluster is made up of nodes that run containerized applications. These nodes are divided into two main types:

- Master nodes (the control plane) manage the cluster and handle scheduling, scaling, and configuration.

- Worker nodes (the data plane) run the workloads — the containerized applications.

What is Amazon EKS?

Amazon Elastic Kubernetes Service (EKS) allows you to deploy, run, and scale Kubernetes both in the cloud and on-premises, without needing to operate or maintain your own Kubernetes control plane or nodes.

EKS manages your master nodes, pre-installs essential software (such as the container runtime and master processes), and provides tools for scaling and backing up your applications. This enables your team to focus on deploying and managing applications, rather than maintaining the infrastructure.

Understanding the Architecture of Amazon EKS

I included this section because understanding the architectural components of Amazon EKS will help you better grasp how Kubernetes clusters are deployed on AWS.

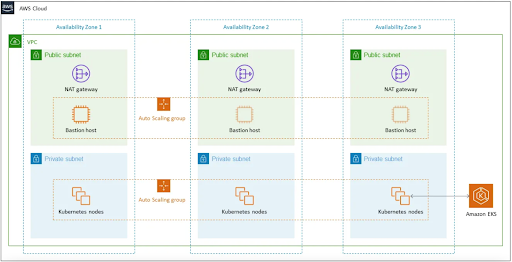

Looking at the Amazon EKS architectural diagram above, you’ll notice it consists of several components. Here’s a brief explanation of each:

- Availability Zone (AZ): An isolated location within an AWS Region that houses one or more data centers. To ensure high availability and fault tolerance, a robust EKS architecture spans three availability zones, so the cluster keeps running if one zone goes down.

- Virtual Private Cloud (VPC): A virtual network that allows you to launch AWS resources using the scalable infrastructure of AWS.

- Public Subnet: A range of IP addresses in the VPC whose resources can be reached from the public internet.

- Private Subnet: A range of IP addresses that are isolated and not directly accessible from the internet.

- Network Address Translation (NAT) Gateway: Located in public subnets, these gateways enable resources in private subnets to access the internet for outbound traffic.

- Bastion Host: A Linux server placed in a public subnet (within an auto-scaling group) to allow secure SSH access to EC2 instances running in private subnets.

- Amazon EKS Cluster: The EKS cluster manages the Kubernetes control plane, which orchestrates the Kubernetes nodes in the private subnets.

From the diagram, you’ll observe that the Amazon EKS infrastructure spans three availability zones. A VPC is configured with public and private subnets in each zone. Each public subnet contains a Bastion host linked to the Bastion hosts in the other availability zones. Similarly, the Kubernetes nodes in the private subnets are interconnected across all availability zones so they can communicate with each other.

Deploying a Kubernetes Cluster to EKS Using the AWS Console

This method allows you to deploy a Kubernetes cluster on AWS without writing any code or using the CLI.

Steps:

- Create an AWS account if you don’t have one already.

- Sign in to the AWS Console to begin deploying your Kubernetes cluster.

After you’ve logged into the AWS console, choose your preferred Region, search for “VPC” in the search bar, and navigate to it.

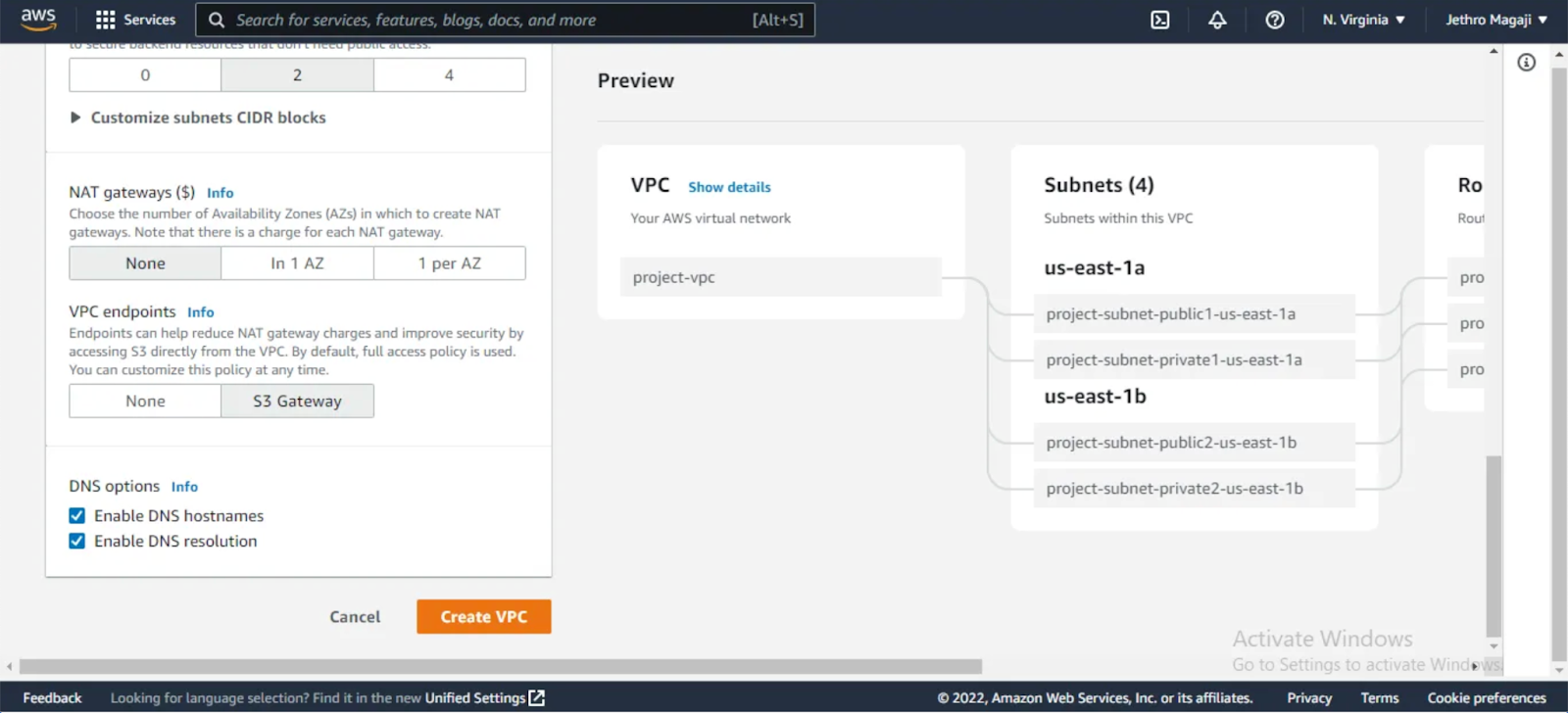

On the VPC dashboard, click on “Create VPC”, leave the default configuration as is, and then click on the “Create VPC” button to create a virtual private cloud with configured public and private subnets.

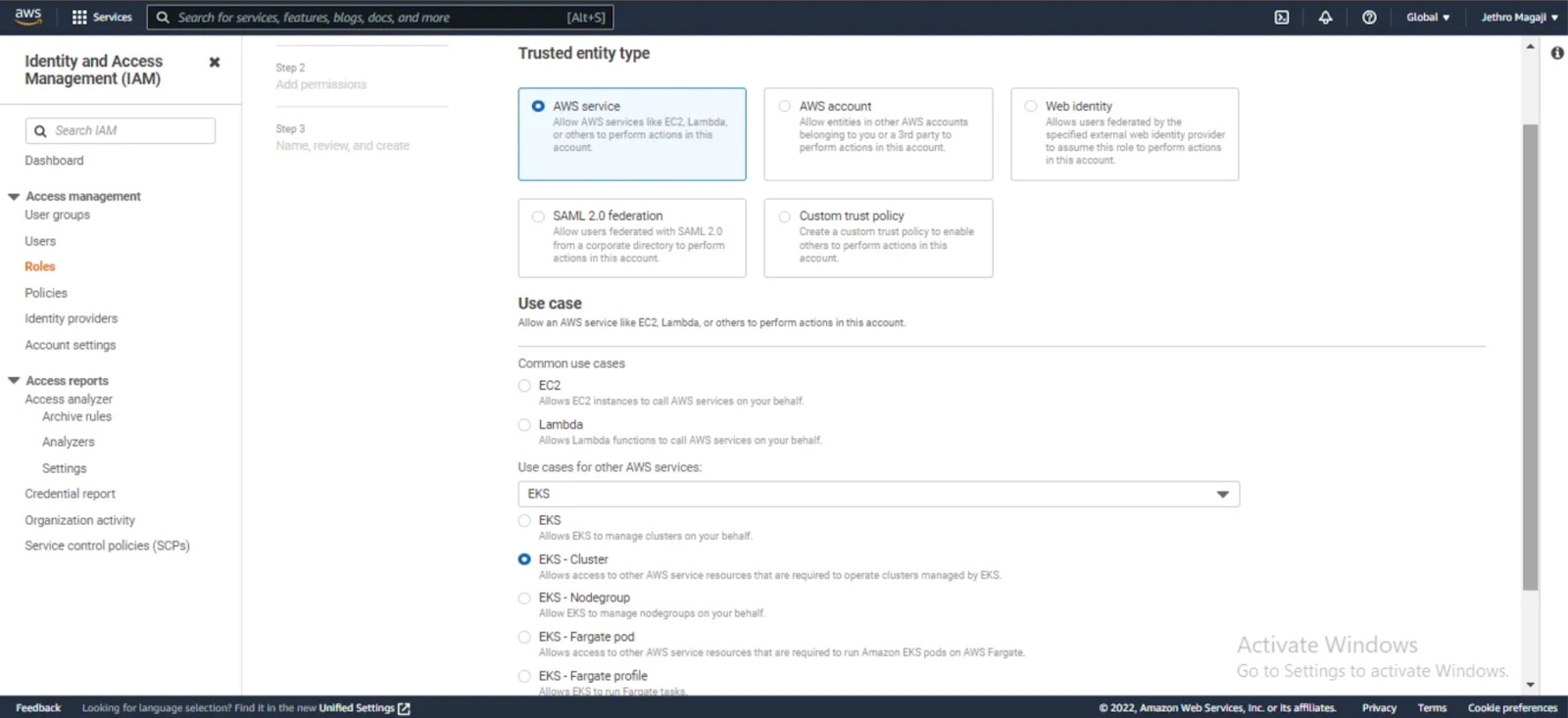

The next thing you’ll need to do is create an IAM role with the permissions that EKS needs to manage resources on your behalf. To do this, search for Identity and Access Management (IAM) in the search bar and navigate to it. On the IAM page, click on “AWS Service” -> “EKS cluster” -> “Next” to add the required permissions and a name, and complete the IAM role creation process.

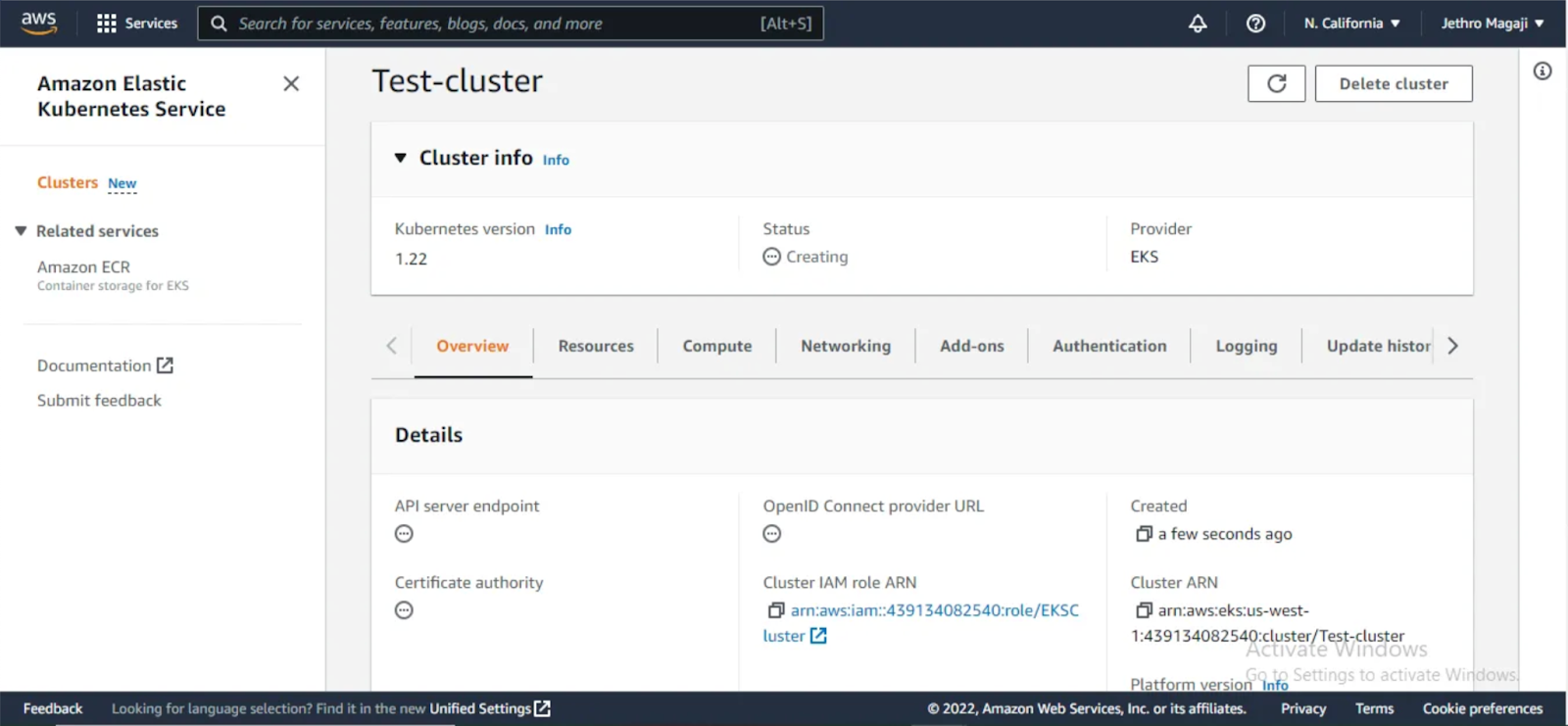

Create a Cluster on the Amazon EKS Dashboard

Creating a cluster on the EKS dashboard allows your containerized applications to run in multiple environments. To do this, navigate to the Amazon EKS dashboard by searching for “EKS” in the search bar, then click “Add cluster” and select “Create” to get started.

Configure the cluster by choosing the IAM role you created for the EKS cluster, then click “Next” to specify the network. There, select the virtual private cloud you created. You can leave the default values for the subnets, IP address, and endpoint access.

After specifying the network details in “Step 2”, configure logging in “Step 3”. You can leave the default values and click “Next” to review and create the cluster in “Step 4”.

Connecting to the EKS Cluster from Your Local Machine

Once your EKS cluster is up and running, you can connect to it from your local machine to deploy applications. If you need a walkthrough, check out this video on how to deploy a web app to a Kubernetes cluster on AWS EKS.

Deploying a Kubernetes Cluster to Amazon EKS Using Your Local Machine

In this section, I’ll guide you through deploying a Kubernetes cluster to Amazon EKS from your local machine.

You’ll need:

- A Virtual Private Cloud (VPC)

- An IAM role

If you don’t already have these, scroll back to the “Deploying a Kubernetes Cluster to EKS Using the AWS Console” section for instructions on how to create them.
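If you prefer to create the IAM role from the terminal instead of the console, the steps can be sketched with the AWS CLI roughly as follows. This is a minimal sketch rather than the exact setup used in the console walkthrough: it assumes the AWS CLI is already installed and configured (see Step 1 below), and the role name eksClusterRole and the file name eks-trust-policy.json are placeholders chosen for illustration.

```shell
# Trust policy that allows the EKS service to assume the role.
cat > eks-trust-policy.json <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "eks.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF

# Creates the role and attaches the AWS-managed EKS cluster policy.
# The role name comes from the first argument (hypothetical default shown).
create_eks_cluster_role() {
  role_name="${1:-eksClusterRole}"
  aws iam create-role \
    --role-name "$role_name" \
    --assume-role-policy-document file://eks-trust-policy.json
  aws iam attach-role-policy \
    --role-name "$role_name" \
    --policy-arn arn:aws:iam::aws:policy/AmazonEKSClusterPolicy
}
```

The function is only defined here, so nothing is created until you call it, for example with create_eks_cluster_role eksClusterRole.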

Step 1: Install and Configure the AWS CLI

First, you’ll need to install and configure the AWS Command Line Interface (CLI) to interact with Amazon EKS directly from your local machine.

- Download and install the AWS CLI from the official AWS documentation.

- Add the AWS CLI to your system path and configure it with your AWS credentials.

- Verify the installation by running the following command in your terminal:

aws --version

If the installation is successful, this command will display the installed AWS CLI version.
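The version line starts with a token of the form aws-cli/MAJOR.MINOR.PATCH. As a small sketch, the helper below extracts the major version from that line so a script can check which generation of the CLI is installed. The function name aws_cli_major_version is hypothetical and not part of the AWS CLI, and the sample version string in the comment is only illustrative.

```shell
# Extracts the major version from a line such as
# "aws-cli/2.15.30 Python/3.11.8 Linux/6.5.0 exe/x86_64".
aws_cli_major_version() {
  version_line="$1"
  first_token="${version_line%% *}"   # keep text before the first space: "aws-cli/2.15.30"
  version="${first_token#aws-cli/}"   # drop the "aws-cli/" prefix: "2.15.30"
  echo "${version%%.*}"               # keep text before the first dot: "2"
}
```

With the CLI installed, it could be called as aws_cli_major_version "$(aws --version 2>&1)"; the 2>&1 is there in case the version is written to standard error.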

Setting Up an EKS Cluster Using the eksctl Command

To create a Kubernetes cluster from your local machine, you’ll need to set up an Amazon EKS master node using the eksctl command. eksctl is a simple CLI tool that automates the process of creating and managing clusters on Amazon Elastic Kubernetes Service (EKS).

With eksctl installed on your local machine, you can create a cluster with a single command, bypassing many manual steps involved in setting up the EKS architecture, which simplifies the process and saves time.

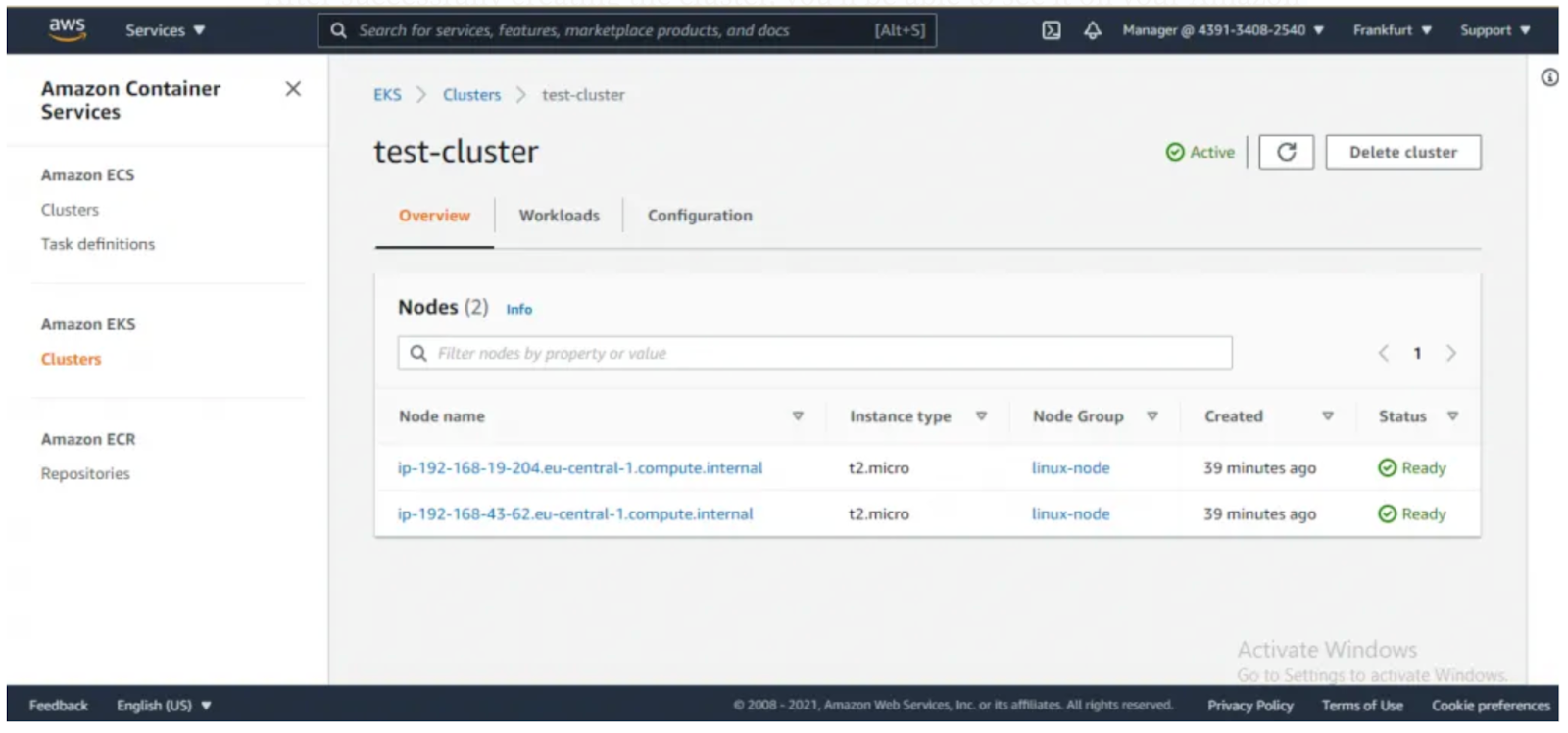

Use the following command to create an EKS cluster:

eksctl create cluster --name test-cluster --version 1.21 --region eu-central-1 --nodegroup-name linux-bode --node-type t2.micro --nodes 2

In this example:

- test-cluster is the name of the cluster.

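- 1.21 is the Kubernetes version. EKS retires older Kubernetes versions over time, so substitute a version that EKS currently supports.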

- eu-central-1 is the AWS region.

- linux-bode is the node group name.

- t2.micro is the node instance type.

- 2 is the number of nodes.
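The same settings can also be kept in a config file, which eksctl accepts through its -f flag. The sketch below writes such a file using the values from the example above. The file name cluster.yaml is an arbitrary choice, and declaring the nodes as a managed node group is an assumption rather than something the command above states.

```shell
# Write an eksctl ClusterConfig that mirrors the flags from the command above.
cat > cluster.yaml <<'EOF'
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: test-cluster
  region: eu-central-1
  version: "1.21"

managedNodeGroups:
  - name: linux-bode
    instanceType: t2.micro
    desiredCapacity: 2
EOF

# Create the cluster from the file.
# Left commented out because it provisions billable AWS resources:
# eksctl create cluster -f cluster.yaml
```

Keeping the configuration in a file makes it easier to review and to store in version control than a long command line.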

After successfully creating the cluster, you’ll be able to see it on your Amazon EKS console.
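To confirm from the terminal that the worker nodes have joined, one option is to point kubectl at the new cluster and count the nodes that report Ready. The following is a sketch under assumptions: it reuses the cluster name and region from the example above, and count_ready_nodes is a hypothetical helper that reads the output of kubectl get nodes --no-headers on standard input.

```shell
# Counts lines whose second column (STATUS) is exactly "Ready".
count_ready_nodes() {
  awk '$2 == "Ready" { ready++ } END { print ready + 0 }'
}

# Typical use once the cluster exists.
# Left commented out because it needs AWS access and a running cluster:
# aws eks update-kubeconfig --region eu-central-1 --name test-cluster
# kubectl get nodes --no-headers | count_ready_nodes
```

For the example cluster, the count should reach the number of nodes requested once they have finished starting up.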

Conclusion

In this article, you’ve learned about the components of the Amazon EKS architecture and how to deploy a Kubernetes cluster on AWS using both the AWS Console and your local machine.

Reminder: Be sure to shut down any services or instances you created during this tutorial to avoid unexpected charges.
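For a cluster created with eksctl, the cleanup can be sketched as below. The helper names run and delete_tutorial_cluster are hypothetical, and the script only prints the command by default as a safety measure; set DRY_RUN=0 to actually execute it. The cluster name and region are the ones from the eksctl example.

```shell
# By default the command is only printed; set DRY_RUN=0 to execute it.
DRY_RUN="${DRY_RUN:-1}"

# Prints the command in dry-run mode, otherwise runs it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Deletes the cluster and the resources eksctl created for it,
# waiting until the deletion has finished.
delete_tutorial_cluster() {
  run eksctl delete cluster --name "$1" --region "$2" --wait
}

delete_tutorial_cluster test-cluster eu-central-1
```

Resources you created by hand in the console, such as the VPC and the IAM role, are not covered by this command and would need to be removed separately.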

Simplified Kubernetes Management with Edge Stack API Gateway

Managing traffic in your Kubernetes cluster requires modern traffic management tools. That’s where Edge Stack comes in. It offers a modern Kubernetes ingress controller that supports multiple protocols, including HTTP/3, gRPC, gRPC-Web, and TLS termination.