With more and more people using web and mobile applications for everyday activities such as shopping, listening to music, and watching movies, applications nowadays must handle a tremendous workload. To ensure that your application can handle an expected user load, load testing must be executed before releasing the application.

However, implementing and debugging your load tests can be tricky. With many simulated requests, it could be daunting to find the root cause of your performance issues by scrolling into pages of the log files. Well, executing the load testing for your application does not need to be like that. This article will teach you how to implement and debug a load test for a Go application running inside a Kubernetes cluster using k6 and Telepresence.

Prerequisites

To effectively follow the steps discussed in this, you need to have the following:

- A ready-to-use Linux machine, preferably Ubuntu version 20.04

- Go, Docker Engine, kubectl & Visual Studio Code installed on your machine

- A DockerHub account to store the application image

What is load testing?

When it comes to performance testing, it’s easy to confuse load testing with stress testing. Stress testing is checking how your application behaves when the user load is beyond the volume it can handle. The expected result is your application should fall back to the normal state after the stress testing is done.

On the other hand, load testing ensures your application can handle an expected number of user requests in a specific time range. Load testing allows you to inspect your application’s behavior under a certain simulated workload. As a result, you will discover your app’s resource utilization issues and address them to ensure the user experience is not impacted by the heavy user load.

What is k6?

k6 is a performance testing tool created by the Grafana Labs team. k6 is built using Go programming language and uses Javascript for scripting your test scenarios. With k6, you can implement the test for different types of backend services, such as REST APIs, GraphQL APIs, gRPC services, and web sockets. You can also set threshold configs for your tests so that your test will fail if the app reaches the breaking point.

About the demo app

Let’s have hands-on practice on a demo application to understand how to implement and debug your load application test. The demo application is a blog service that allows users to:

- Create a new blog

- Update an existing blog

- Get all the blogs

- Get the blog content by its id

- Delete the blog by its id

The blog service will be built using the following:

- Go programming language

- PostgreSQL database to store the app data

- Kubernetes platform to manage and store the blog service and the PostgreSQL database instances

Now that you have an overview of how the demo application works, let’s move on to the next section to set up the Kubernetes cluster to deploy the app.

Set up the Kubernetes Cluster

To set up the Kubernetes cluster, you can have two following options:

- Set up from scratch on your infrastructure. This way, you can utilize your existing infrastructure and store all the application data on your servers. However, setting up and maintaining the Kubernetes cluster on your own is complicated and requires in-depth knowledge of how Kubernetes works.

- Set up the Kubernetes cluster using cloud providers’ services such as Amazon Web Service (AWS), Elastic Kubernetes Service(EKS), Google Kubernetes Engine (GKE), or Vultr Kubernetes.

You can create the Kubernetes cluster using either way, but I would suggest using the Kubernetes cloud provider for easier setup. After creating your own Kubernetes cluster, you should have a Kubernetes configuration file to access the Kubernetes cluster from your machine.

To access the Kubernetes cluster, open a new terminal in your machine and run this command: export KUBECONFIG=/path/to/the/config/file. Then, run the kubectl get node command to check whether you can access the Kubernetes cluster. You should see a similar output as below:

NAME STATUS ROLES AGE VERSION

telepresence-k6-c1d2a04201a1 Ready <none> 19h v1.26.2Now that you have a Kubernetes cluster that is ready for use, let’s move on to the next section to deploy the blog app to the Kubernetes cluster.

Deploy the application to the Kubernetes Cluster

To deploy the app to the Kubernetes cluster, you need to:

- Clone the application code to your machine

- Build the Docker image for your app

- Push your Docker image to DockerHub

- Deploy the PostgreSQL database to the Kubernetes cluster

- Deploy the blog app to the Kubernetes cluster

1. Clone the application’s code to your machine

The blog application code is at telepresence-k6-load-test GitHub repository. From the current terminal window, run the following commands to clone the application code:

mkdir ~/Projects -p

cd Projects

git clone https://github.com/cuongld2/telepresence-k6-load-test.gitYou should see the current structure of your application code as:

.

├── config

├── Dockerfile

├── go.mod

├── go.sum

├── handler

├── kubernetes

├── main.go

└── .vscode

├── .envFor context

- The .vscode directory stores the launch.json file. You will use the launch.jsonfile to set up the debugging environment for your application while executing the load testing.

- The config directory stores the db.go file for connecting to the PostgreSQL database.

- The handler directory has the handlers.go file for defining the functions to create, update, retrieve, and delete blogs.

- The kubernetes directory stores all the deployment files for deploying the PostgreSQL database and blog app to the Kubernetes cluster.

- The main.go file is the entry file of the application.

- The .env is the environment file that you will use for accessing the blog app service inside the Kubernetes cluster from your local Visual Studio Code.

- The go.mod and go.sum are for defining the dependencies that your application needs.

- The Dockerfile lists all needed dependencies and commands to build the Docker image for your blog app.

Now that you have the blog application code on your machine, let’s build the Docker image for the blog service.

2. Build the Docker image for the blog service

To build the Docker image for the blog app, run the following command below and replace the “username” field with your actual DockerHub’s username.

docker build -t username/blog .Wait a few minutes for the application image to be built, then move on to the next section to push the app image to DockerHub.

3. Push the app’s image to Dockerhub

First, you need to log in to your DockerHub account from the terminal by running the following command:

docker loginThen, run the following command to push the app image to DockerHub. Don’t forget to replace the username field with your username.

docker push username/blogNow that you have the application image in the DockerHub server, let’s move on to deploy the PostgreSQL database to the Kubernetes cluster.

4. Deploy the Postgresql database to the Kubernetes Cluster

From the current terminal, navigate to the kubernetes/postgresql directory by running this command cd kubernetes/postgresql .

To have your app data persisted, you need to deploy the PostgreSQL database using Kubernetes Persistent Volume. To create the Persistent Volume for your PostgreSQL database, run the following command: kubectl apply -f postgres-volume.yml. Then, run this command kubectl apply -f postgres-pvc.ymlto create the Kubernetes Persistent Volume Claim to request for storage inside the Persistent Volume.

The next thing you’ll need to do is to create the PostgreSQL init SQL queries. To do this, run this command kubectl apply -f postgres-init-sql.yml on your terminal and then proceed to deploy the PostgreSQl database to Kubernetes by running the kubectl apply -f postgres-deployment.yml command.

You need to expose the PostgreSQl database to enable the blog application to access it. To do this, run the kubectl apply -f postgres-service.yml command on your terminal.

Before proceeding to the next step, let’s confirm that the PostgreSQL database pods and services are running as expected and are up and running by running these commands kubectl get pod and kubectl get service . If all goes as planned, then you should see results similar to the one below:

NAME READY STATUS RESTARTS AGE

postgresql-5b4669bc56-vkdl8 1/1 Running 0 19hNAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 20h

postgresql ClusterIP 10.96.105.126 <none> 5432/TCP 19hHaving confirmed that the PostgreSQL database is running in the Kubernetes cluster, let’s move on to the next part to deploy the blog service.

5. Deploy the blog service to the Kubernetes cluster

From the current terminal, navigate to the kubernetes/blog-app directory by running this command cd ../blog-app. Then open the blog-app-deployment.yml file and replace username in the image:username/blog line with your actual DockerHub username.

After updating your DockerHub username, go back to your terminal and deploy the blog into the Kubernetes cluster by running the kubectl apply -f blog-app-deployment.yml command.

The blog app is up and running, but right now, we can only interact with it from inside the Kubernetes cluster. So, let’s make it accessible outside of the cluster by exposing it. To do this, run the kubectl apply -f blog-app-service.yml command.

Then, proceed to run the kubectl get service to check whether the service we just created is running as expected. If everything works as expected, you’ll get results similar to the one below, showing you that the service creation was successful.

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

blogapp-service LoadBalancer 10.100.190.129 45.77.43.83 8081:32765/TCP 20h

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 20h

postgresql ClusterIP 10.96.105.126 <none> 5432/TCP 19hNotice the EXTERNAL-IP value of the blogapp-service service. You will use this when implementing the load test for the blog app service.

Now that you have deployed the blog app and the PostgreSQL database to the Kubernetes cluster successfully let’s move on to the next section to implement the load test using k6.

Implementing the Load Test

To implement the load test using k6, first, you must install k6 on your machine. To do so, run the following command:

sudo gpg -k

sudo gpg - no-default-keyring - keyring /usr/share/keyrings/k6-archive-keyring.gpg - keyserver hkp://keyserver.ubuntu.com:80 - recv-keys C5AD17C747E3415A3642D57D77C6C491D6AC1D69

echo "deb [signed-by=/usr/share/keyrings/k6-archive-keyring.gpg] https://dl.k6.io/deb stable main" | sudo tee /etc/apt/sources.list.d/k6.list

sudo apt-get update

sudo apt-get install k6If you’re using MacOS, Windows, or a non-Debian-based Linux, please refer to the k6 installation page for details on how to install k6 in different environments.

Then, run this k6 version command to check whether k6 is successfully installed. On doing this, you’ll see a similar result as below:

k6 v0.43.1 (2023–02–27T10:53:03+0000/v0.43.1–0-gaf3a0b89, go1.19.6, windows/amd64)To implement the load test for your blog app service, you need to create a k6 script file. Let’s create a new file named telepresence-blog.js by running the command below

cd ../..

nano telepresence-blog.jsThen copy the following content to the telepresence-blog.js file:

import http from 'k6/http';

import { check, sleep } from 'k6';

export const options = {

stages: [

{ duration: '5s', target: 10 },

{ duration: '5s', target: 5 },

{ duration: '5s', target: 0 },

],

};

export default function () {

const data = JSON.stringify({ body: 'this is a great blog one',});

const domain = "http://45.77.43.83:8081";

const headers = { 'Content-Type': 'application/json'

};

const res = http.post(domain+'/blog',data,{ headers });

check(res, { 'status was 200': (r) => r.status === 200 });

sleep(1);

}Let’s go through the test scenario of this k6 script file. In the first two lines, you already imported the http, check, and sleep functions from k6.

- The http function is for creating the HTTP request so that you can interact with the blog APIs

- The check function is for checking whether the HTTP status code of the API equals 200

- The sleep function is for pausing the test for a short period. In real-world scenarios, real users usually wait for one or two seconds before doing the next action to the web app. Using the sleepfunction, you can simulate how real users interact with the service under test.

The export const optionblock code defines how the simulated users are generated. Here, you defined the users to increase to 10 in 5 seconds. Then, decrease the number of users to 5 in the next 5 seconds. Finally, you decrease the users to 0 in the last 5 seconds.

The export default functionblock code is to define what the simulated user will do. Basically, you tell the simulated user to make an API request to the API for creating a new blog post. Then you tell k6 to check whether the response status code equals 200. Finally, you tell the simulated user to wait for 1 second before doing the next iteration (After each default function block is executed, if the simulated user is still active, the user will try to execute the whole steps in the code block again).

Before running the test, you must replace “45.77.43.83” with your actual blog app service external-ip value. Save the file, then run the following command:

k6 run telepresence-blog.jsYou should see a similar test result as below:

data_sent………………….: 14 kB 881 B/s

http_req_blocked……………: avg=13.89ms min=0s med=0s max=392.07ms p(90)=75.83ms p(95)=79.1ms

http_req_connecting…………: avg=13.89ms min=0s med=0s max=392.07ms p(90)=75.83ms p(95)=79.1ms

http_req_duration…………..: avg=79.65ms min=68.32ms med=79.31ms max=108.36ms p(90)=84.96ms p(95)=86.57ms

{ expected_response:true }…: avg=79.65ms min=68.32ms med=79.31ms max=108.36ms p(90)=84.96ms p(95)=86.57ms

http_req_failed…………….: 0.00% ✓ 0 ✗ 78

http_req_receiving………….: avg=126.75µs min=0s med=0s max=1.61ms p(90)=467.49µs p(95)=947.57µs

http_req_sending……………: avg=14.95µs min=0s med=0s max=187.7µs p(90)=29.66µs p(95)=143.6µs

http_req_tls_handshaking…….: avg=0s min=0s med=0s max=0s p(90)=0s p(95)=0s

http_req_waiting……………: avg=79.51ms min=68.32ms med=79.08ms max=107.32ms p(90)=84.75ms p(95)=86.57ms

http_reqs………………….: 78 5.063333/s

iteration_duration………….: avg=1.09s min=1.07s med=1.08s max=1.46s p(90)=1.15s p(95)=1.16s

iterations…………………: 78 5.063333/s

vus……………………….: 1 min=1 max=10

vus_max……………………: 10 min=10 max=10With the above scenario, the average response time of each request is 79.65 milliseconds. There were 78 HTTP requests made, and none of them failed. But there’s a problem with our test script; we forgot to delete the blog post after creating it. Running a number of load test scenarios without cleaning up data will create a lot of unused data and might lead to performance problems for our database. To delete the blog post after it was created, add the following code below sleep(1) line:

const deleteRes = http.del(domain+'/blog/'+res.json().id)

check(deleteRes, { 'status was 200': (r) => r.status === 200 });

sleep(1);Let’s rerun the test for telepresence-blog.js file by running this command: k6 run telepresence-blog.js. This will return results similar to the one below:

✓ status was 200

checks…………………….: 100.00% ✓ 80 ✗ 0

data_received………………: 12 kB 764 B/s

data_sent………………….: 11 kB 663 B/s

http_req_blocked……………: avg=16.98ms min=0s med=0s max=415.69ms p(90)=92.29ms p(95)=108.22ms

http_req_connecting…………: avg=16.97ms min=0s med=0s max=415.69ms p(90)=92.29ms p(95)=108.2ms

http_req_duration…………..: avg=119.37ms min=69.74ms med=107.3ms max=511.6ms p(90)=157.32ms p(95)=179.86ms

{ expected_response:true }…: avg=119.37ms min=69.74ms med=107.3ms max=511.6ms p(90)=157.32ms p(95)=179.86ms

http_req_failed…………….: 0.00% ✓ 0 ✗ 80

http_req_receiving………….: avg=145.3µs min=0s med=0s max=1.1ms p(90)=605.84µs p(95)=895.41µs

http_req_sending……………: avg=24.8µs min=0s med=0s max=481.1µs p(90)=95.75µs p(95)=136.96µs

http_req_tls_handshaking…….: avg=0s min=0s med=0s max=0s p(90)=0s p(95)=0s

http_req_waiting……………: avg=119.2ms min=69.74ms med=107.07ms max=511.6ms p(90)=157.28ms p(95)=179.86ms

http_reqs………………….: 80 4.965341/s

iteration_duration………….: avg=2.27s min=2.16s med=2.24s max=3.01s p(90)=2.33s p(95)=2.35s

iterations…………………: 40 2.48267/s

vus……………………….: 1 min=1 max=10

vus_max……………………: 10 min=10 max=10The average response time has increased slightly to 119.37 milliseconds, and no HTTP requests have failed. However, the maximum response time of the request is 511.6 milliseconds, whereas previously, it was only 108.36 milliseconds.

We need to check the application log to debug why the maximum response increases to 511.6 milliseconds. However, we have a lot of logs showing in the application pod. Let’s use Telepresence to debug this performance problem to ease the debugging task.

Install Telepresence to your Kubernetes Cluster

Telepresence can be installed on all operating systems. Run the following command to install Telepresence if you are using a macOS or Linux machine, then use the installation details below. If not, go to Telepresence’s installation page.

sudo curl -fL https://app.getambassador.io/download/tel2/linux/amd64/latest/telepresence -o /usr/local/bin/telepresence

sudo chmod a+x /usr/local/bin/telepresenceTo check whether Telepresence is installed successfully, run the telepresence version command. This will return an output similar to the one below:

Note: Originally, this blog was published using v2.13.2 of Telepresence. Telepresence is now on v2.19.2 and this will work with the latest version.

Client : v2.19.2To allow Telepresence to interact with the Kubernetes cluster, you need to install the Telepresence Traffic Manager to the Kubernetes cluster by running the telepresence helm install command. Then, run this command kubectl get pod — namespace ambassador to confirm that it has been installed correctly.

To connect your local machine to the Kubernetes cluster, run the telepresence connectcommand. You should see a similar output in the console as the one below:

Launching Telepresence Daemon

Connected to context default (https://<your-Kubernetes-cluster-public-IP>)With Telepresence now installed and running, we can now use it to debug the blog app.

Using Telepresence to Debug the App

Before debugging the blog app, you need to intercept the requests sent to the blog app in the Kubernetes cluster to the blog app running on your local machine. To do this, run the following command:

telepresence intercept blogapp --port 8081:8081- env-file .envOn doing this, telepresence will start the intercepting process for the blog service and store all the environment variables of the blog service to .env file. You should see the following output as below:

Intercept name : blogapp

State : ACTIVE

Workload kind : Deployment

Destination : 127.0.0.1:8081

Service Port Identifier: http

Volume Mount Point : /tmp/telfs-230806768

Intercepting : all TCP requestsHere, you see the traffic agent has been installed to intercept the blog service. Let’s debug the blog service from Visual Studio Code.

Debug the blog app from Visual Studio Code

First, you need to install delve to debug the Go code. You can do this by running the command below:

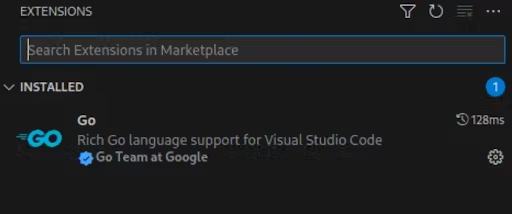

go install github.com/go-delve/delve/cmd/dlv@latestThen, install Go’s extension in your Visual Studio Code

Load Tests

Figure 1: Install the Go extension in Visual Studio Code

Inside the .vscode/launch.json file, you already have the configuration for debugging the blog service app.

{

"version": "0.2.0",

"configurations": [

{

"name": "Launch with env file",

"type": "go",

"request": "launch",

"mode": "debug",

"program": "${workspaceRoot}",

"envFile": "${workspaceFolder}/.env"

}

]

}Visual Studio Code will use the values in the .envfile to connect with the Kubernetes cluster and will run the blog app using the code files from the root directory of the application code.

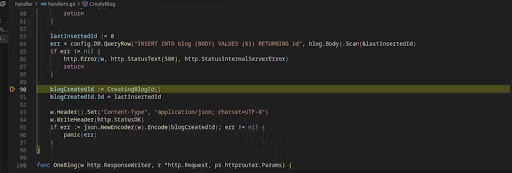

To debug the blog app, set breaking points to the lines of code that you want the debugging process to stop. Let’s set the breaking points to line 90 and line 180 inside the handlers.go file since we want to check the code processes related to creating a new blog and removing a blog post.

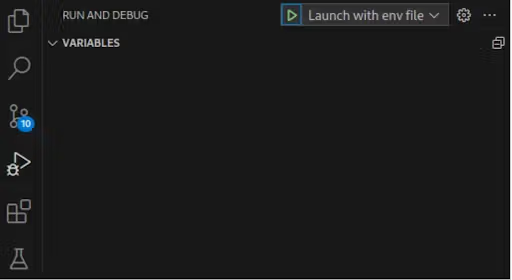

Click on the “Run and Debug” button on the left panel of the screen and click on the “Play” button to run the “Launch with env file” debug task.

Now that the debugging setup is done, let’s try to create a new blog post by running the following curl command:

curl - location 'http://45.77.43.83:8081/blog' \

- header 'Content-Type: application/json' \

- data '{

"body":"this is a great blog one"

}'curl - location 'http://45.77.43.83:8081/blog' \

- header 'Content-Type: application/json' \

- data '{

"body":"this is a great blog one"

}'You should see the debugger in Visual Studio Code stop at line 90 in the handlers.go file.

Looking carefully at the code inside the CreateBlog function, we don’t see anything wrong with it. So, let’s click on the “Continue” button in the Visual Studio Code app; we see that the curl process has stopped with the response containing information about the blog id.\

{

"id": 786

}Let’s try to remove this blog id using the delete blog API by running the command below:

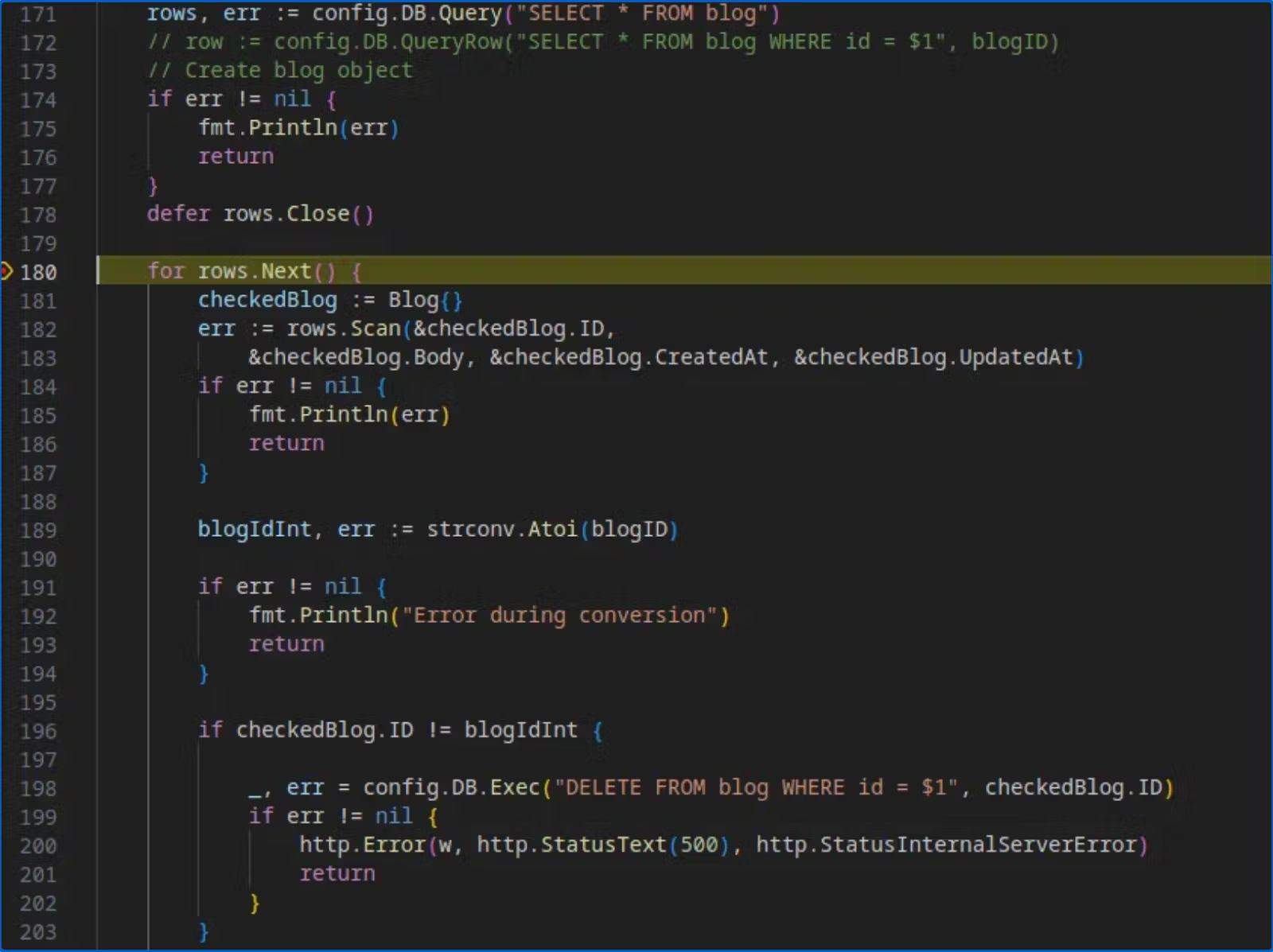

curl - location - request DELETE 'http://45.77.43.83:8081/blog/786'The Visual Studio Code debugger now stops at line 180 in handlers.go file.

Load Tests

Figure 4: Debugger jumps to line 180 in the handlers.go file

Carefully looking at the code inside the DeleteBlog function, you’ll see that the delete API will try to select all the existing blogs inside the blog table: rows, err:= config.DB.Query("SELECT * FROM blog") and then iterate the rows’ values using for rows.Next(). When iterating over the list of rows, if the blog id in the list does not equal the provided id in the URL of the delete API, the API will delete that blog.

Wait, we have a problem here. The API should delete the blog if the blog id in the list of blogs matches the provided ID in the API URL. This is why we have an API request that took more than 500 milliseconds to complete because the delete function will try to delete every blog except the one with the id provided in the API URL. Suppose we have 500 blogs in the database. The API will try to delete 499 blogs instead of just 1 blog.

Let’s click on the “Continue” button in the Visual Studio Code to complete this API request. Run the following command curl — location “http://45.77.43.83:8081” to check all the blogs we have now. This return results similar to the one below:

Load Tests

Figure 4: Debugger jumps to line 180 in the handlers.go file

Carefully looking at the code inside the DeleteBlog function, you’ll see that the delete API will try to select all the existing blogs inside the blog table: rows, err:= config.DB.Query("SELECT * FROM blog") and then iterate the rows’ values using for rows.Next(). When iterating over the list of rows, if the blog id in the list does not equal the provided id in the URL of the delete API, the API will delete that blog.

Wait, we have a problem here. The API should delete the blog if the blog id in the list of blogs matches the provided ID in the API URL. This is why we have an API request that took more than 500 milliseconds to complete because the delete function will try to delete every blog except the one with the id provided in the API URL. Suppose we have 500 blogs in the database. The API will try to delete 499 blogs instead of just 1 blog.

Let’s click on the “Continue” button in the Visual Studio Code to complete this API request. Run the following command curl — location “http://45.77.43.83:8081” to check all the blogs we have now. This return results similar to the one below:As we expected, the blog post was not deleted because the API will try to delete every other blog except the id is 786.

Find the solution to the performance issues

To fix the problem, we need to delete the blog if it exists in the blog table. To do this, replace the existing DeleteBlog function code with the code below. This code will only delete the blog if it exists instead of deleting all other blogs.

func DeleteBlog(w http.ResponseWriter, r *http.Request, ps httprouter.Params) {

blogID := ps.ByName("id")

row := config.DB.QueryRow("SELECT * FROM blog WHERE id = $1", blogID)

// Create blog object

deletingBlog := Blog{}

er := row.Scan(&deletingBlog.ID,

&deletingBlog.Body, &deletingBlog.CreatedAt, &deletingBlog.UpdatedAt)

switch {Save the file handlers.go, remove the breaking points in the Visual Studio Code, and run the debugger again. Let’s try to create a new blog by running the below command:

curl - location 'http://45.77.43.83:8081/blog' \

- header 'Content-Type: application/json' \

- data '{

"body":"this is a great blog one"

}'You should see the following output:

{

"id":788

}Let’s remove the blog with the id 788.

curl - location - request DELETE 'http://45.77.43.83:8081/blog/788'Now, let’s try to get all the blogs in the blog table.

curl - location 'http://45.77.43.83:8081'You should see the following output:

[

{

"id": 787,

"body": "this is a great blog one",

"created_at": "2023–05–24T06:12:00.416938Z",

"updated_at": "2023–05–24T06:12:00.416938Z"

}

]The blog with id 788 has been deleted. Congratulations, you have successfully resolved the problem.

Conclusion

Through the article, you have learned how to implement load tests and debug performance problems. With the help of Telepresence, debugging the load test problem is a breeze