Strategies for planning and implementing a migration from virtual machines to a cloud-native platform

An increasing number of organizations are migrating from a datacenter composed of virtual machines (VMs) to a “next-generation” cloud-native platform that is built around container technologies like Docker and Kubernetes. However, due to the inherent complexity of this move, a migration doesn’t happen overnight. Instead, an organization will typically be running a hybrid multi-infrastructure and multi-platform environment in which applications span both VMs and containers. Beginning a migration at the edge of a system, using functionality provided by a cloud-native API gateway, and working inwards towards the application opens up several strategies to minimize the pain and risk.

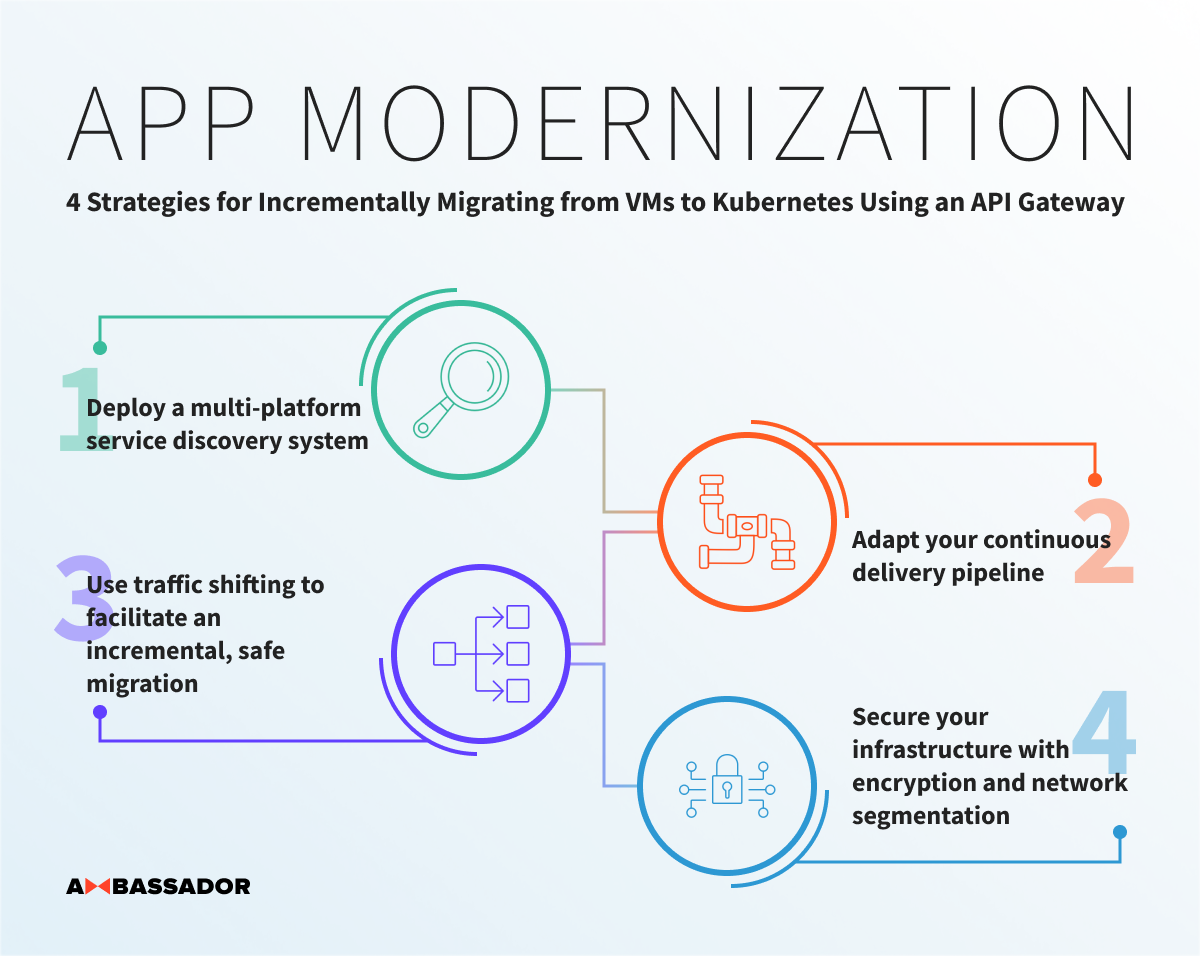

A recently published article series on the Ambassador blog presented four strategies for planning and implementing such a migration: deploying a multi-platform service discovery system that can route effectively within a highly dynamic environment; adapting your continuous delivery pipeline to take advantage of best practices and avoid the pitfalls of network complexity; using traffic shifting to facilitate an incremental and safe migration; and securing your infrastructure with encryption and network segmentation for all traffic, from end user to service.

Strategy 1: Deploy a multi-platform service discovery system

During a migration to cloud and containers, it is common to see a combination of existing applications being decomposed into services and new systems being designed using the microservices architecture style. Business functionality is often provided via an API that is powered by the collaboration of one or more services, and these components therefore need to be able to locate and communicate with each other.

In a multi-platform and multi-cloud environment, two categories of challenges present themselves:

- Routing traffic effectively across a range of different platforms and infrastructure, including multiple Kubernetes clusters.

- Continually updating the location of services that are deployed within dynamically scheduled container runtimes like Kubernetes and running on (ephemeral) commodity cloud infrastructure.

The edge of the system is a good place to solve these two challenges, as all user traffic passes through some form of gateway or proxy stack and can be routed accordingly. For example, an API gateway can be combined with a multi-platform service discovery solution.
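To make the service discovery idea more concrete, here is a minimal Python sketch of a registry that an edge gateway could consult. It assumes that instances running on VMs and in Kubernetes clusters (or agents acting for them) register themselves and re-register periodically as a heartbeat, and that any instance not heard from within a time-to-live is treated as gone. `ServiceRegistry`, `register`, and `resolve` are hypothetical names, not the API of any particular product.

```python
# Minimal sketch of a multi-platform service registry with heartbeat expiry.
# Assumption: VM and Kubernetes instances call register() periodically as a
# heartbeat. All names here are hypothetical.
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Endpoint:
    address: str           # e.g. "10.0.0.5:8080"
    platform: str          # e.g. "vm" or "k8s-cluster-a"
    last_heartbeat: float  # clock reading when the endpoint last checked in


class ServiceRegistry:
    def __init__(self, ttl_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        # service name -> {address -> Endpoint}
        self._services: Dict[str, Dict[str, Endpoint]] = {}

    def register(self, service: str, address: str, platform: str) -> None:
        """Add an endpoint, or refresh it if already known (acts as a heartbeat)."""
        self._services.setdefault(service, {})[address] = Endpoint(
            address, platform, self._clock()
        )

    def deregister(self, service: str, address: str) -> None:
        """Remove an endpoint explicitly, e.g. during a graceful shutdown."""
        self._services.get(service, {}).pop(address, None)

    def resolve(self, service: str) -> List[Endpoint]:
        """Return live endpoints on every platform, evicting expired ones first."""
        now = self._clock()
        endpoints = self._services.get(service, {})
        expired = [addr for addr, ep in endpoints.items()
                   if now - ep.last_heartbeat > self._ttl]
        for addr in expired:
            del endpoints[addr]
        return sorted(endpoints.values(), key=lambda ep: ep.address)
```

Expiring entries on a TTL is what copes with ephemeral infrastructure: a pod or VM that disappears without deregistering simply stops being returned. A production setup would more likely rely on a dedicated multi-platform service discovery tool (Consul is one example) than on hand-written code like this.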

Strategy 2: Adapt your continuous delivery pipeline

An organization can’t expect to migrate its existing applications to a new platform overnight. There are simply too many moving parts and too much complexity within a typical existing legacy/heritage stack. Any migration needs to be planned and undertaken incrementally, and the best practices for building and deploying applications should be encoded into a continuous delivery pipeline.

Key requirements for adapting a delivery pipeline to multi-platform and multi-cloud environments include:

- The ability to create and initialize Kubernetes clusters on demand for QA, and to route traffic dynamically into these clusters.

- A capability to launch migrated applications for automated verification that runs in a production-like (or ideally production) environment without exposing the applications to end users.

- Support for the incremental rollout of functionality on the new platform. Ideally, customer traffic can be shifted or split so that a small number of users is served by applications running on the new infrastructure, which allows low-risk verification of the migration.

Decoupling the deployment of new functionality from its release is a fundamental part of continuous delivery, and implementing this typically begins at the edge.
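One common way to decouple deployment from release at the edge is header-based routing: the migrated service is deployed and reachable, but only requests carrying a specific header (for example, those sent by the pipeline's automated verification) are routed to it, while end users continue to reach the existing application. The sketch below assumes a hypothetical `x-migration-test` header and made-up upstream names.

```python
# Minimal sketch: decoupling deployment from release with header-based routing.
# Assumptions (hypothetical, for illustration only): the pipeline's automated
# tests send an "x-migration-test: true" header; upstream names are made up.
from typing import Mapping

LEGACY_UPSTREAM = "orders-vm"      # existing application running on VMs
CANDIDATE_UPSTREAM = "orders-k8s"  # migrated service: deployed, not yet released
TEST_HEADER = "x-migration-test"


def choose_upstream(headers: Mapping[str, str], released: bool) -> str:
    """Pick an upstream for a single request.

    Before release, only requests carrying the test header reach the migrated
    service; everyone else keeps hitting the legacy deployment.
    """
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    if released or normalized.get(TEST_HEADER) == "true":
        return CANDIDATE_UPSTREAM
    return LEGACY_UPSTREAM
```

With this arrangement, releasing becomes a routing change (setting `released` to `True`) rather than a new deployment.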

Strategy 3: Use traffic shifting to facilitate an incremental, safe migration

Once a migration plan has been created and agreed upon within an organization, it has to be implemented. An effective strategy is to deploy an API gateway on the new platform (e.g., Kubernetes) and use that API gateway to route traffic to the existing platforms. This strategy allows operators and engineers to gain familiarity with the new target environment without requiring a “big bang” migration of applications from one platform to another. Once the API gateway is deployed, it can be used to gradually shift traffic from existing applications to new services.
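Gradually shifting traffic is usually expressed as a percentage weight on the new service. This minimal sketch assumes each request carries a stable user identifier. Hashing that identifier, rather than choosing randomly per request, keeps each user on the same side of the split, so a user moved to the new platform at a 10% weight stays there when the weight is raised to 25%. The function names are hypothetical.

```python
# Minimal sketch of weighted traffic shifting (canary routing) at the gateway.
# Assumption: every request carries a stable user ID; names are hypothetical.
import hashlib


def bucket(user_id: str) -> int:
    """Map a user ID deterministically to a bucket in the range 0-99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def route(user_id: str, new_weight_percent: int) -> str:
    """Send roughly new_weight_percent% of users to the new platform."""
    if not 0 <= new_weight_percent <= 100:
        raise ValueError("weight must be between 0 and 100")
    return "new" if bucket(user_id) < new_weight_percent else "legacy"
```

Because a user's bucket never changes, raising the weight only ever moves additional users onto the new platform; lowering it back to 0 acts as a rollback.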

Functionality required for incrementally migrating applications and their traffic routing includes:

- Decentralized and self-service configuration of ingress (north-south) traffic routing, which enables product, API, and application teams to independently plan and implement their migration.

- The ability to implement traffic shifting, using capabilities such as canary release routing and traffic shadowing.

- Support for routing service-to-service (east-west) traffic across multiple infrastructure types (and clouds), platforms, and clusters. Routing east-west traffic only within Kubernetes is not sufficient for this incremental migration approach.

- The ability to provide centrally configured “sane defaults” for traffic management, such as globally enforced transport encryption (including a minimum TLS version), authentication via third-party IdPs, and rate limiting to help mitigate DDoS attacks.
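Self-service routing and centrally configured defaults can coexist if team-supplied settings are merged over the platform defaults but are not allowed to weaken them. The sketch below is one hypothetical way to express that merge: the field names, the `corporate-idp` provider name, and the specific limits are assumptions, and the choice to let teams tighten but never raise the rate limit is an illustrative policy decision, not a requirement from any particular gateway.

```python
# Minimal sketch: central "sane defaults" merged with self-service route config.
# All field names and values are hypothetical; real gateways express this in
# their own configuration formats.
from dataclasses import dataclass, replace
from typing import Optional

TLS_ORDER = ["1.0", "1.1", "1.2", "1.3"]  # oldest to newest


@dataclass(frozen=True)
class RoutePolicy:
    min_tls_version: str = "1.2"
    auth_provider: Optional[str] = "corporate-idp"  # hypothetical IdP name
    rate_limit_rps: int = 100


PLATFORM_DEFAULTS = RoutePolicy()


def effective_policy(team_overrides: dict) -> RoutePolicy:
    """Apply a team's overrides without letting them weaken platform defaults."""
    policy = replace(PLATFORM_DEFAULTS, **team_overrides)
    # A team may require a newer TLS version, but never an older one.
    floor = PLATFORM_DEFAULTS.min_tls_version
    if TLS_ORDER.index(policy.min_tls_version) < TLS_ORDER.index(floor):
        policy = replace(policy, min_tls_version=floor)
    # Authentication cannot be switched off by a team override.
    if policy.auth_provider is None:
        policy = replace(policy, auth_provider=PLATFORM_DEFAULTS.auth_provider)
    # Rate limits may be tightened but not raised above the platform default.
    capped = min(policy.rate_limit_rps, PLATFORM_DEFAULTS.rate_limit_rps)
    return replace(policy, rate_limit_rps=capped)
```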

Using a service mesh to implement advanced traffic routing is becoming a popular approach. Although they look similar at first glance, an API gateway and a service mesh target different use cases and have different requirements: the gateway primarily manages north-south traffic entering the system at the edge, while the mesh manages east-west traffic between services.

Strategy 4: Secure your infrastructure with encryption and network segmentation

Security in software delivery is an important and broad topic, and understanding it becomes even more important during a migration to a new platform. Designing security in at every stage of the process is essential, from design to coding and packaging (including dependency management), and ultimately to deployment and operation. At runtime, implementing defense in depth is vital. As part of any migration, traffic flowing across infrastructures and platforms must be secured. Any implementation should include appropriate user authentication at the edge, as well as encryption of traffic flowing from the edge and between services.

Security requirements for a multi-platform migration include:

- The capability to deploy and manage transport encryption components, including regular rotation of the related certificates, on any target infrastructure, cloud, and platform.

- The ability of the API gateway (which performs user authentication) to integrate tightly with service-to-service transport security, ensuring that there are no gaps in encryption for traffic flowing from the edge to a service.
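Regular certificate rotation across several platforms is easier to enforce with an automated check. This minimal sketch assumes that an inventory of certificate records (platform, name, expiry time) has already been collected from each cluster and VM group, which is not shown here; the `CertRecord` type and the default 30-day rotation window are assumptions.

```python
# Minimal sketch: find certificates, on any platform, that are due for rotation.
# Assumption: the inventory of CertRecord entries is gathered elsewhere.
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List


@dataclass(frozen=True)
class CertRecord:
    platform: str        # e.g. "vm" or "k8s-cluster-a"
    name: str            # certificate or secret name
    not_after: datetime  # expiry time (timezone-aware, UTC)


def due_for_rotation(certs: Iterable[CertRecord], now: datetime,
                     window: timedelta = timedelta(days=30)) -> List[CertRecord]:
    """Return certificates expiring within `window` of `now` (or already expired),
    soonest first."""
    return sorted(
        (c for c in certs if c.not_after - now <= window),
        key=lambda c: c.not_after,
    )
```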

As mentioned previously, deploying a service mesh is becoming a common approach to implementing end-to-end transport security. Because this practice and the associated technologies are relatively new, it is important that engineers “mind the gap” during any incremental migration and do not accidentally expose user traffic through holes or cracks in an implementation.
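One way to “mind the gap” is to model the hops a request takes from the edge to a service and flag any hop that is not encrypted. The `Hop` type and the idea of an inventory describing each hop are hypothetical; in practice this information would have to be assembled from gateway, service mesh, and VM routing configuration.

```python
# Minimal sketch of a "mind the gap" audit over the hops of one request path.
# Assumption: hop data comes from a hypothetical inventory of gateway, mesh,
# and VM routing configuration.
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Hop:
    source: str
    destination: str
    encrypted: bool  # True if TLS or mTLS protects this hop


def plaintext_gaps(path: List[Hop]) -> List[str]:
    """Return descriptions of unencrypted hops along a path from edge to service."""
    # A discontinuous path means some segment is missing from the model, which
    # is itself a potential hole, so refuse to report it as fully covered.
    for prev, nxt in zip(path, path[1:]):
        if prev.destination != nxt.source:
            raise ValueError(
                f"path is discontinuous between {prev.destination} and {nxt.source}"
            )
    return [f"{hop.source} -> {hop.destination}" for hop in path if not hop.encrypted]
```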

Wrapping up

This article series and the associated multi-platform code and configuration examples were written to help platform operators and engineers migrate applications from VMs to Kubernetes. As discussed, a migration is not a trivial exercise, and it is important to plan accordingly and to carry out the implementation incrementally and securely.

The example code is continually evolving, so please get in touch with the Datawire team with any requests for particular cloud vendors or complex routing scenarios.