A Comprehensive Guide to Cloud-Native Apps

Introduction to Cloud-Native Apps

Common Cloud-Native App Architectures

Cloud-Native App Benefits

The Fallacies of Distributed Computing

Common Challenges for Cloud-Native Apps

A cloud-native app is designed and written specifically to run in the cloud and to take advantage of the properties of cloud infrastructure. An organization can consider itself “cloud native” when it has also adopted supporting DevOps workflows and practices that bring greater agility, greater speed, and fewer problems for both the app and the organization.

In common cloud-native architectures, an app is composed of several loosely coupled, highly cohesive microservices that work together to form a distributed system. Loosely coupled means that an individual microservice can be changed internally with minimal impact on any other microservice. Highly cohesive means that each microservice is built around a well-defined business context, so any required modification typically stays within a single area of responsibility or functionality.

Cloud-native applications are often packaged and run in containers. The underlying cloud infrastructure typically runs on shared commodity hardware that regularly changes, restarts, or fails. A microservice should therefore be designed to be ephemeral: it should start quickly, rapidly locate the network services it depends on, and fail fast.
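To make “start quickly and fail fast” concrete, here is a minimal Python sketch of a startup step that tries to locate required dependencies within a short deadline and stops with a clear error if it cannot. The `lookup` callable, the dependency names, and the `StartupError` type are hypothetical; in practice the lookup might read environment variables, query DNS, or ask a service registry.

```python
import time
from typing import Callable, Dict, Iterable, Optional


class StartupError(RuntimeError):
    """Raised when a required dependency cannot be located before the deadline."""


def locate_dependencies(
    names: Iterable[str],
    lookup: Callable[[str], Optional[str]],
    deadline_s: float = 5.0,
    poll_interval_s: float = 0.1,
) -> Dict[str, str]:
    """Resolve every required dependency, or fail fast once the deadline passes.

    `lookup` is a hypothetical resolver that returns an address or None.
    """
    pending = set(names)
    found: Dict[str, str] = {}
    stop_at = time.monotonic() + deadline_s
    while True:
        # Iterate over a sorted copy so entries can be removed from `pending` safely.
        for name in sorted(pending):
            address = lookup(name)
            if address is not None:
                found[name] = address
                pending.discard(name)
        if not pending:
            return found
        if time.monotonic() >= stop_at:
            # Give up loudly instead of running without a dependency.
            raise StartupError(f"could not locate: {', '.join(sorted(pending))}")
        time.sleep(poll_interval_s)
```

A service might catch `StartupError` at startup, log it, and exit with a non-zero status, leaving the orchestrator to restart it rather than letting it run half-configured.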

Dividing an app into numerous microservices increases its flexibility and scalability, but the potential downside is reduced availability due to the app’s increased complexity and dynamic nature. An app divided into more pieces is also harder to secure, observe, and monitor; these challenges are covered in Common Challenges for Cloud-Native Apps.

Now let’s take a closer look at cloud-native apps.

Introduction to Cloud-Native Apps

Becoming cloud native means more than having all of an organization’s applications designed and written specifically to run in cloud environments. It also means that the organization has adopted development and operational practices that enable agility for both the apps and the organization itself.

The cloud-native architecture evolved in response to common bottlenecks that slow down the development and release of monolithic applications. It helps teams avoid the code “merge hell” that results when many engineers work on a single codebase, allows individual services to be released independently, and limits the area to investigate (the blast radius) when a component fails. The cloud-native approach lets development and operations staff rapidly make localized decisions within their defined responsibilities and carry those decisions out.

DevOps workflows combine software development and operations to enable faster, more frequent software releases with a lower rate of failure; the goal is continuous delivery.

In common cloud-native architectures, an app is typically written as several independent microservices, each performing a different function. The microservices are deployed and run in containers in the cloud, and they communicate over the network to collectively provide the app’s functionality, working together as a distributed system.

Common Cloud-Native App Architectures

Before cloud-native apps, an app’s architecture was often a single “monolithic” entity that consisted of a single service providing many functions. The cloud-native approach means that instead of being a single service, the app comprises multiple microservices, each performing a well-defined single function or small set of functions.

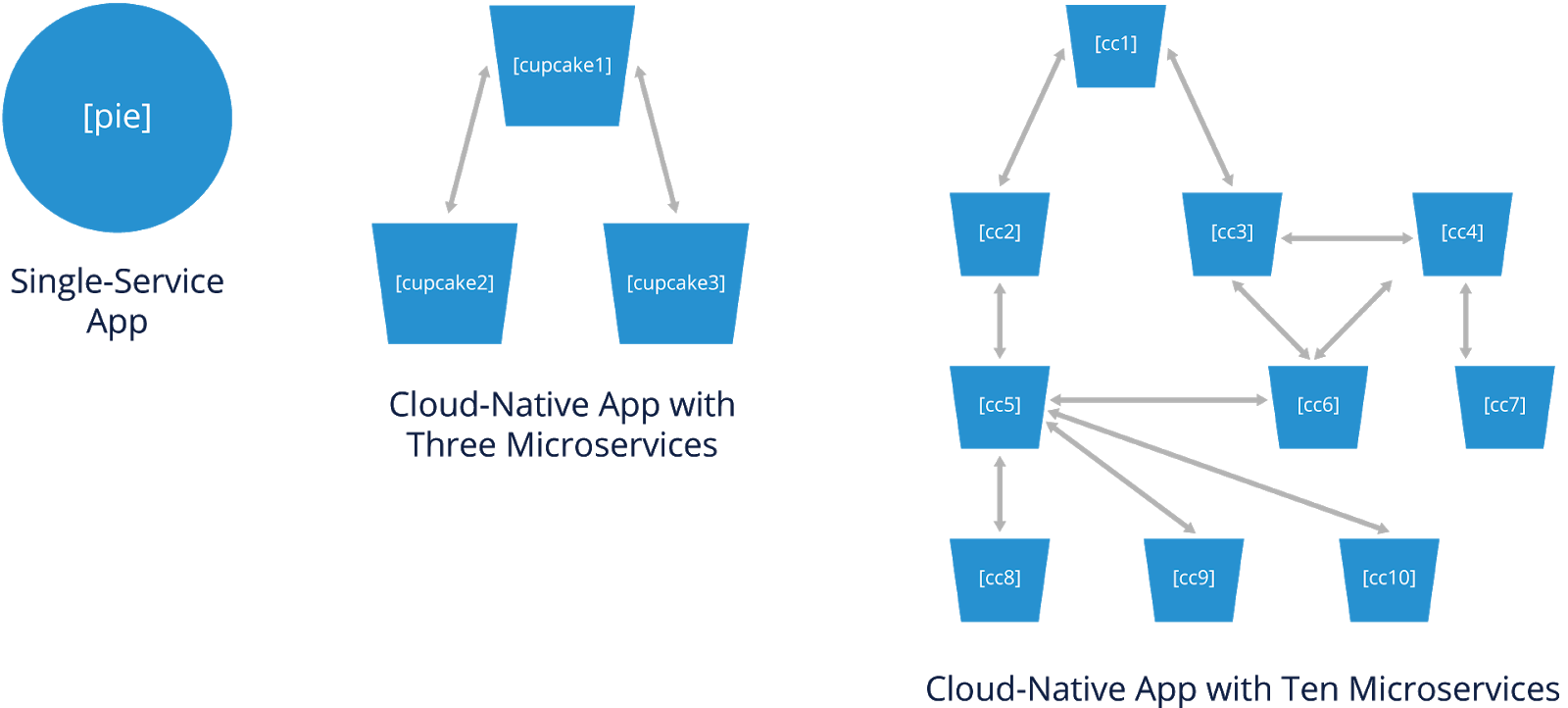

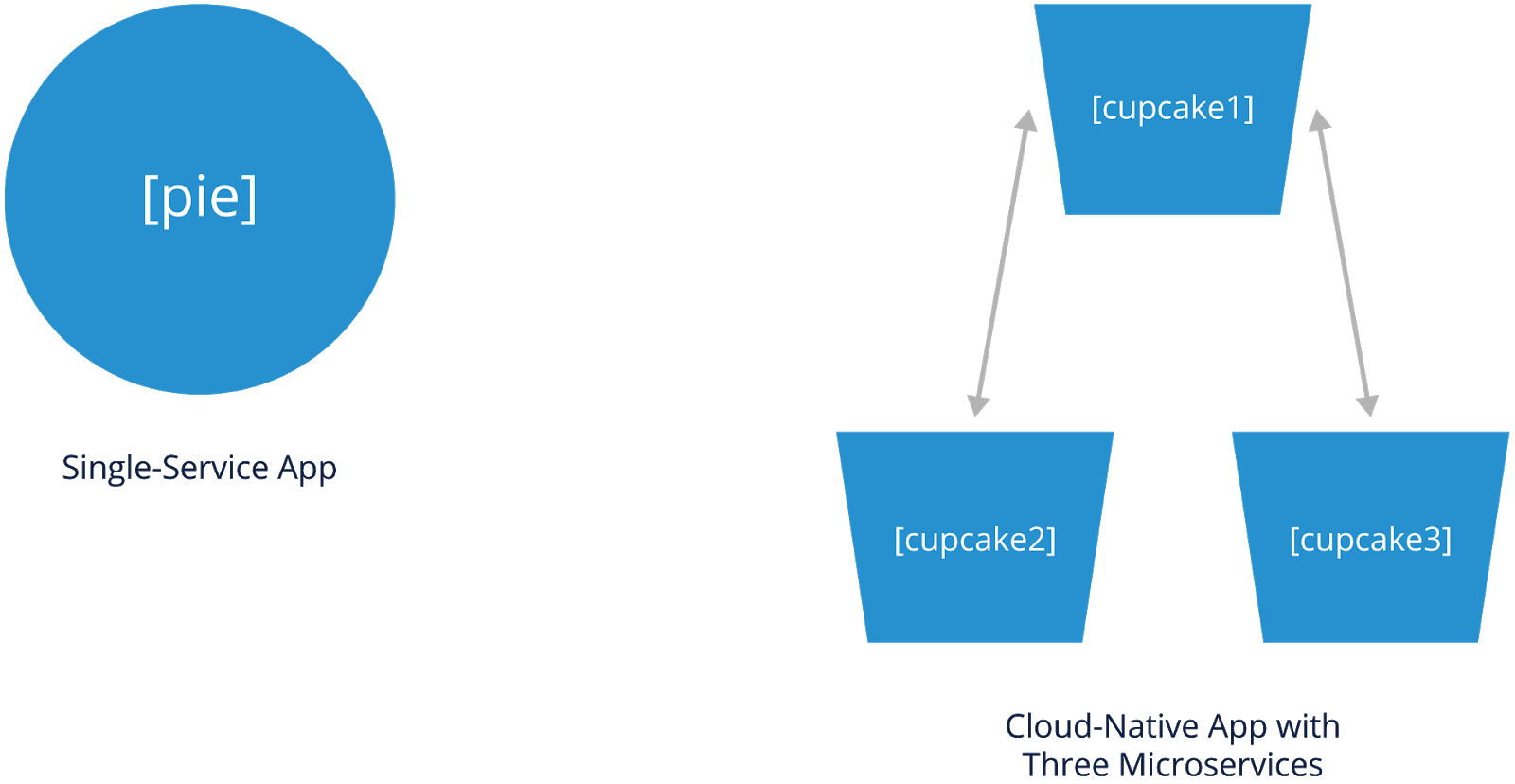

The diagrams above show two examples: a single-service app and a cloud-native app comprising three microservices. The single-service app is depicted as a pie, reflecting that the app is a single entity that must be made as a whole. The cloud-native app is depicted as cupcakes: each cupcake (microservice) is independent of the others and can have a different recipe (language, etc.).

Each microservice is decoupled from the others, meaning that any microservice can be changed internally without needing to change any other microservices. So you can change the recipe for one cupcake without changing the recipes for the others. With a pie, changing the recipe affects the whole pie.

Most microservices are also designed to be ephemeral, meaning that they can safely and rapidly stop and start at any time. For example, the virtual machine a service runs on may restart, which restarts all the processes and applications running on that VM.

In the cloud-native app, two of the microservices communicate with the third. Both the single-service app and the cloud-native app can provide the same functionality.

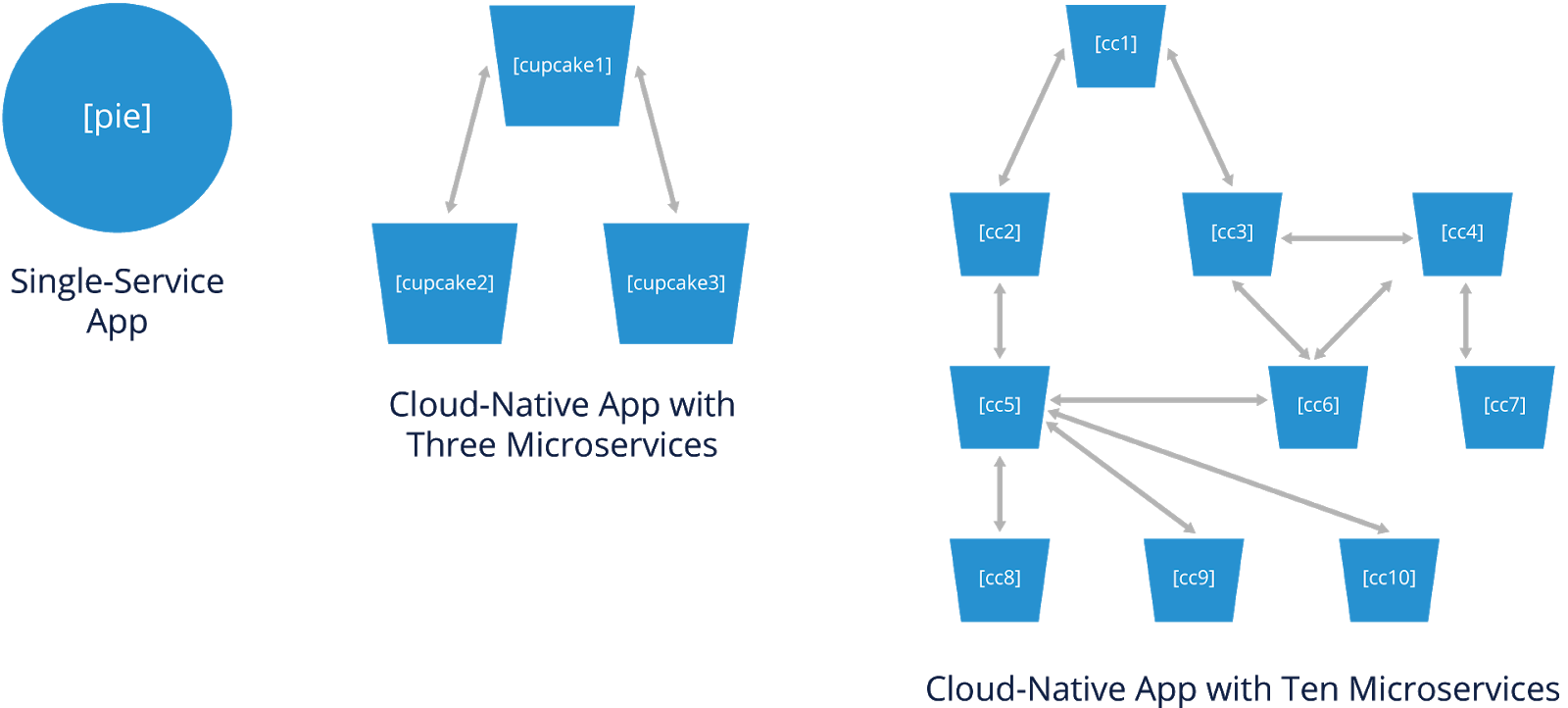

The diagram above adds a third example: an app with ten microservices, each independent of the others, depicted as ten different types of cupcakes. This hints at how much more complex a cloud-native app’s architecture can be; for example, some microservices communicate with more than one other microservice. All 10 microservices are part of a single distributed system, and each can run anywhere as long as it can communicate with the others it depends on.

Cloud-Native App Benefits

From a developer’s viewpoint, the primary benefit of cloud-native apps over other apps is that each part of a cloud-native app can be developed and deployed separately from the others, which enables faster development and release. Returning to the pie versus cupcake analogy, each microservice in a cloud-native app can also be customized and tailored to meet specific needs: customizing one cupcake doesn’t affect the others.

To illustrate benefits for developers, let’s look at two scenarios.

Scenario 1: Suppose a cloud-native app consists of 10 microservices, each developed by a different team and loosely coupled with the others. If one of those teams wants to add a new feature, it revises its microservice, and the updated microservice can be shipped immediately, in keeping with continuous delivery.

The same is true for all teams in this scenario, so no team will be delayed because they have to wait for another team to work on something unrelated.

Scenario 2: Suppose that a single-service app provides the same functionality as the cloud-native app from Scenario 1, and ten teams develop parts of it. If one of those teams wants to add a new feature, it has to wait until the next scheduled release of the app, which depends on the other teams completing their current development work, whether or not that work is related to the first team’s.

For the first team, there could be a delay of days, weeks, or months before its new feature finally ships. Delays also spread: one team falling behind schedule can hold up every other team from releasing its new features.

So cloud-native apps allow organizations to be more agile and innovative, taking advantage of new opportunities as soon as they arise.

From an operations viewpoint, the primary benefit of cloud-native apps is that each microservice comprising the cloud-native app can be easily deployed, upgraded, and replaced without adversely affecting the other parts of the app. Each microservice should be independently releasable and deployable.

Additional copies of each microservice can be deployed or retired to scale the app up or down to meet demand. New microservice versions can be rolled out, and users can transition to them without an entire app outage. Copies of the same microservice can also be running in multiple clouds.
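The scaling decision can be expressed as a proportional rule. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler, desired replicas = ceil(current replicas * current metric / target metric), clamped to a minimum and maximum. The function name and default bounds are illustrative assumptions, and the sketch omits refinements the real controller applies, such as a tolerance band and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA formula."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    # Twice the target load per copy -> roughly twice as many copies.
    desired = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp so a spike cannot scale without bound and at least one copy keeps running.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 copies running at 80% average CPU against a 50% target yields ceil(6.4) = 7 copies; at 20% CPU the same rule scales down to 2.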

If developers follow established cloud-native best practices, such as designing applications according to Heroku’s Twelve-Factor App principles, the applications become even easier to operate. Examples include:

- Treating backing services (database, messaging, caching, etc.) as attached resources.

- Executing the application as one or more stateless processes.

- Maximizing robustness with fast startup and graceful shutdown.
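The three practices above can be sketched in a few lines of Python. This is a hypothetical example, not code from the Twelve-Factor App site: configuration (including the location of a backing service) comes from environment variables, the work function keeps no state between jobs, and a SIGTERM handler lets the process stop taking new work so it can shut down gracefully. `DATABASE_URL`, `PORT`, `Worker`, and `install_sigterm_handler` are illustrative names.

```python
import os
import signal
import threading
from dataclasses import dataclass
from typing import Iterable, List, Mapping


@dataclass(frozen=True)
class Config:
    database_url: str  # backing service located by URL, not hard-coded
    port: int


def load_config(env: Mapping[str, str] = os.environ) -> Config:
    """Read config from the environment; swapping a backing service needs no code change."""
    return Config(database_url=env["DATABASE_URL"], port=int(env.get("PORT", "8080")))


def process(job: str) -> str:
    """Stateless work: the result depends only on the input, never on earlier jobs."""
    return job.upper()


class Worker:
    def __init__(self) -> None:
        self._stopping = threading.Event()

    def handle_sigterm(self, signum: int, frame: object) -> None:
        # Stop accepting new work; the job already in progress is allowed to finish.
        self._stopping.set()

    def run(self, jobs: Iterable[str]) -> List[str]:
        results = []
        for job in jobs:
            if self._stopping.is_set():
                break  # graceful shutdown: exit between jobs, not in the middle of one
            results.append(process(job))
        return results


def install_sigterm_handler(worker: Worker) -> None:
    """Register the handler; Python requires this to run in the main thread."""
    signal.signal(signal.SIGTERM, worker.handle_sigterm)
```

Calling `install_sigterm_handler(worker)` before `run` wires the handler up. Orchestrators such as Kubernetes send SIGTERM and wait for a grace period before forcibly stopping a container, so finishing the current job and exiting promptly avoids losing work.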

The Fallacies of Distributed Computing

As we mentioned earlier, cloud-native applications are typically decomposed into individual microservices. This decomposition of business logic results in a distributed system, as the microservices need to communicate with each other over a network for the application to function.

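The fallacies of distributed computing are a list of false assumptions that programmers new to distributed applications commonly make; the list was compiled at Sun Microsystems in the 1990s. Cloud-native apps, and the cloud itself, didn’t exist when the list was compiled, but several of the fallacies are relevant to cloud-native app adoption today, including the following: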

- “The network is reliable.”

- “Latency is zero.”

- “Bandwidth is infinite.”

- “The network is secure.”
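Code that assumes the network is reliable and latency is zero tends to make a remote call once and wait indefinitely for the reply. A common countermeasure, sketched below under stated assumptions, is to give every call a timeout and retry transient failures with exponential backoff and random jitter. The `call` argument stands in for a hypothetical network client that accepts a timeout in seconds; which exceptions count as transient is an assumption to adjust for a real client.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(
    call: Callable[[float], T],
    attempts: int = 4,
    timeout_s: float = 0.5,
    base_delay_s: float = 0.1,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `call(timeout_s)`, retrying transient errors with exponential backoff.

    `call` is a hypothetical client function that takes a timeout in seconds.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            # Always bound the wait: latency is not zero, and a reply may never come.
            return call(timeout_s)
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            # Exponential backoff with full jitter: wait up to 0.1 s, 0.2 s, 0.4 s, ...
            sleep(random.uniform(0, base_delay_s * 2 ** attempt))
    raise AssertionError("unreachable")
```

Retries are only safe for operations that are idempotent, or made idempotent (for example with request IDs); otherwise a retried request may be applied twice.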

Things can and will go wrong with any app, but a distributed app has more opportunities to fail than a non-distributed one, because every network call between services is another point where a failure can occur.
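A rough back-of-the-envelope calculation illustrates the effect. Assuming every request needs all n services and that the services fail independently, the app’s availability is the product of the individual availabilities. With 10 services at 99.9% availability each:

```latex
A_{\text{app}} = \prod_{i=1}^{n} A_i, \qquad A_{\text{app}} = 0.999^{10} \approx 0.990
```

That is roughly 1% unavailability, about ten times the downtime of any single service. Real systems deviate from these assumptions in both directions: redundancy raises availability, while correlated failures lower it.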

Common Challenges for Cloud-Native Apps

Let’s look closely at some common challenges cloud-native apps can pose.

The first of these challenges is being able to find all the microservices that are dynamically deployed. As microservices are migrated from one place to another, and additional instances of microservices are deployed, it becomes increasingly difficult to keep track of where all the instances are currently deployed at any given time. This challenge is commonly referred to as service discovery.
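A service registry is one common answer to service discovery: instances register their address when they start, send periodic heartbeats, and are dropped when the heartbeats stop. The toy in-memory Python class below only illustrates the idea; production systems use dedicated tools such as Consul or etcd, or the DNS-based discovery built into Kubernetes. The class name, method names, and the 10-second TTL default are assumptions.

```python
import time
from typing import Callable, Dict, List


class ServiceRegistry:
    """Toy in-memory registry: instances register, heartbeat, and expire after a TTL."""

    def __init__(self, ttl_s: float = 10.0, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_s
        self._clock = clock
        # service name -> {instance address -> time of last heartbeat}
        self._instances: Dict[str, Dict[str, float]] = {}

    def register(self, service: str, address: str) -> None:
        self._instances.setdefault(service, {})[address] = self._clock()

    # A heartbeat simply refreshes the instance's timestamp.
    heartbeat = register

    def deregister(self, service: str, address: str) -> None:
        self._instances.get(service, {}).pop(address, None)

    def lookup(self, service: str) -> List[str]:
        """Return live instances, pruning any that stopped sending heartbeats."""
        entries = self._instances.get(service)
        if not entries:
            return []
        now = self._clock()
        live = {addr: seen for addr, seen in entries.items() if now - seen <= self._ttl}
        self._instances[service] = live
        return sorted(live)
```

Injecting the clock keeps the expiry logic deterministic to test; a caller would typically pick one of the returned addresses (for example at random) to spread load across instances.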

A second challenge involves security and observability. Cloud-native apps are inherently more complex, which makes them harder to secure: they have larger attack surfaces and more logical pieces to protect. The added complexity also makes it harder to monitor how the app operates and to understand what happens when things go wrong, especially when debugging.
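One widely used observability technique is to tag every request with a correlation ID, pass it along on each downstream call, and include it in structured log lines, so that a single request can be followed across services. The sketch below assumes a hypothetical `X-Correlation-ID` header and illustrative function names; the W3C Trace Context `traceparent` header is a standardized alternative used by distributed tracing systems.

```python
import json
import logging
import uuid
from typing import Any, Dict, Mapping

CORRELATION_HEADER = "X-Correlation-ID"  # hypothetical header name; conventions vary


def correlation_id(incoming: Mapping[str, str]) -> str:
    """Reuse the caller's correlation ID, or start a new one at the edge of the system."""
    return incoming.get(CORRELATION_HEADER) or uuid.uuid4().hex


def outgoing_headers(cid: str) -> Dict[str, str]:
    """Propagate the same ID on every downstream call so logs can be joined later."""
    return {CORRELATION_HEADER: cid}


def log_event(logger: logging.Logger, service: str, cid: str, message: str, **fields: Any) -> str:
    """Emit one structured (JSON) log line tagged with the service name and correlation ID."""
    line = json.dumps(
        {"service": service, "correlation_id": cid, "message": message, **fields},
        sort_keys=True,
    )
    logger.info(line)
    return line
```

With every service logging the same `correlation_id`, a log aggregator can reconstruct the path of one failing request across all the microservices it touched.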

With an application built using a monolithic architecture, a crash in one component typically crashes the entire application. A microservice-based architecture allows a crash to stay isolated in one service, limiting the blast radius and preventing the entire application from crashing. However, a failure in one service can still cascade throughout the entire application if it is not handled properly. The more services there are, the greater the chance that a partial failure turns into a system failure, which decreases availability and increases the application’s downtime.
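A common way to keep one failing service from dragging down its callers is the circuit breaker pattern: after a number of consecutive failures, the breaker “opens” and callers fail fast instead of piling requests onto a struggling dependency; after a cooldown, trial calls are allowed through (“half-open”), and a success closes the circuit again. The minimal, single-threaded Python sketch below is illustrative only; the thresholds, names, and injected clock are assumptions.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised instead of calling a dependency that is known to be failing."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout_s:
            return "half-open"  # cooldown elapsed: let a trial call through
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("dependency unavailable; failing fast")
        try:
            result = fn()
        except Exception:
            self._failures += 1
            if self.state == "half-open" or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # trip (or re-trip) the breaker
            raise
        # Any success closes the circuit and resets the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

Failing fast while the circuit is open frees callers' threads and connections instead of letting them queue behind a dependency that is already down, which is how a breaker helps stop a partial failure from cascading.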

Take another look at the ten-microservice diagram we examined earlier, and consider it in the context of each of these challenges.